Building an MCP-Powered Blog Post Generator with Nextra and LLMs

Updated on

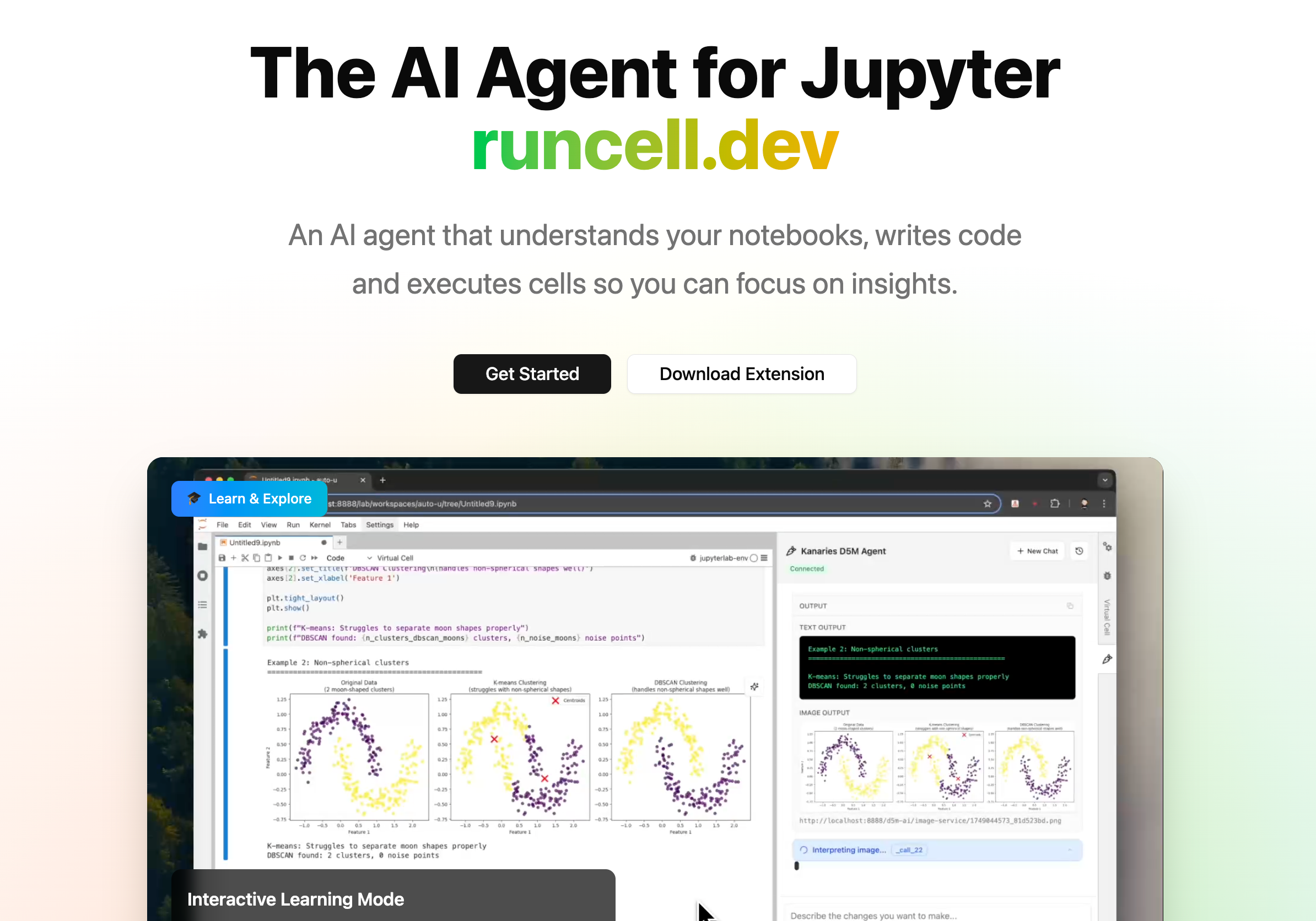

The AI Agent for Jupyter Notebooks

An AI agent that understands your notebooks, writes code and executes cells so you can focus on insights. Accelerate your data science workflow with intelligent automation that learns from your coding patterns.

In this tutorial, we'll create a technical solution that lets you chat with a large language model (LLM) and ask it to generate content for your Nextra-based blog. The content will be written in MDX and automatically committed to your blog's GitHub repository as new posts. We'll explore two implementations of this Model Context Protocol (MCP) integration:

- Serverless via GitHub Actions: Use a GitHub Actions workflow to handle the entire process – invoking the LLM and committing the generated post.

- Hosted via Next.js API: Implement a simple MCP server as a Next.js API endpoint (using jojocys/nextra-agent-blog as our example blog platform) which also acts as the MCP client. This endpoint will call the LLM and push new posts to GitHub in real-time.

MCP is an open standard for connecting AI assistants to external tools and data. It provides a universal interface for AI models to interact with systems like cloud services, databases – even platforms like GitHub and Slack – without custom integration for each tool (Model Context Protocol (MCP) Explained). In other words, MCP enables AI agents to use "tools" to take actions beyond their base knowledge. For example, an AI assistant could use MCP to perform tasks such as sending emails, deploying code changes, or publishing blog posts (Build and deploy Remote Model Context Protocol (MCP) servers to Cloudflare). Here, our "tool" will be the ability to create a new MDX file and commit it to a Git repository (the blog).

By the end of this guide, you'll have a working setup where you (as the sole user) can request a blog article from an AI agent and have it appear on your site automatically. We'll focus on how the pieces connect (MCP client↔︎LLM and LLM↔︎GitHub) and assume a single-user scenario with no additional authentication.

Overview of the Solution

Before diving into code, let's outline the architecture and workflow:

- Blog Platform: We use a Next.js site with the Nextra "blog theme" (such as the jojocys/nextra-agent-blog repository) to host our content. Blog posts are stored as MDX files (Markdown with JSX) in the repository (e.g. in a pages/posts directory). Nextra will display any MDX file in this folder as a blog post, using its frontmatter (title, date, etc.) and content.

- LLM (AI Assistant): We'll use an AI model (for example OpenAI's GPT or Anthropic's Claude) to generate blog post content. The model will receive a prompt (possibly via a chat interface or an API call) describing what article to write, and it will produce an MDX-formatted article in response.

- MCP Client & Server: In MCP terms, the LLM acts as a host that can call external tools via a client-server interface. We will implement a minimal MCP setup:

  - In the GitHub Actions approach, the GitHub Actions workflow itself plays the role of the MCP client. It initiates the conversation with the LLM (host) and then executes the "write post" tool by committing to the repo.

  - In the Next.js API approach, we'll create a custom API route that acts as both an MCP client (handling LLM requests) and an MCP server for our blog repo (providing the tool to create commits). Essentially, this endpoint will be a bridge between the LLM and GitHub.

- GitHub Integration: Both approaches add the new post to the repository, either through direct Git operations (the Actions workflow) or through GitHub's HTTP API (the Next.js endpoint). We'll see examples of each. (Notably, Anthropic's MCP ecosystem already includes a pre-built GitHub connector that supports repository operations (GitHub - modelcontextprotocol/servers: Model Context Protocol Servers), but here we'll learn by building it ourselves.)

With this context in mind, let's start with the serverless GitHub Actions method.

Approach 1: Serverless Blog Post Generation with GitHub Actions

In this approach, we use a GitHub Actions workflow to generate and publish blog posts on demand. This means you don't need to run any dedicated server – GitHub will spin up a runner to execute the steps whenever triggered. The workflow will perform the following steps:

- Trigger: You (the user) trigger the workflow (e.g. manually or via a repository dispatch event) and provide a prompt or topic for the blog post.

- LLM Invocation: The workflow calls the LLM (via its API, e.g. OpenAI or Claude) with a prompt asking for an MDX blog post on the given topic.

- File Creation: It captures the LLM's response (the MDX content) and saves it as a new .mdx file in the blog repository, including a YAML frontmatter (title, date, etc.).

- Commit to GitHub: The new file is committed and pushed to the repository (e.g., to the main branch), making the blog post live.

Let's create the workflow file. In your blog repo (the one using Nextra), add a workflow file at .github/workflows/llm-agent.yml:

    name: LLM Blog Post Generator

    # Allow manual trigger of this workflow
    on:
      workflow_dispatch:
        inputs:
          prompt:
            description: "Topic or prompt for the blog post"
            required: true
            type: string

    permissions:
      contents: write # allow pushing to the repo

    jobs:
      generate_post:
        runs-on: ubuntu-latest
        steps:
          # Step 1: Check out the repository code
          - name: Check out repo
            uses: actions/checkout@v3

          # Step 2: Install tools (jq for JSON parsing, if not pre-installed)
          - name: Install jq
            run: sudo apt-get -y install jq

          # Step 3: Call the LLM API to generate content
          - name: Generate blog content with OpenAI
            env:
              OPENAI_API_KEY: ${{ secrets.OPENAI_API_KEY }}
              # Pass the prompt through the environment so that it is never
              # interpolated directly into the shell script
              PROMPT: ${{ github.event.inputs.prompt }}
            run: |
              echo "Prompt: $PROMPT"
              # Prepare the OpenAI API request payload
              REQUEST_DATA=$(jq -n --arg prompt "$PROMPT" '{
                "model": "gpt-3.5-turbo",
                "messages": [
                  {"role": "system", "content": "You are a technical blogging assistant. Write an MDX blog post with a YAML frontmatter (title, date, description, type: posts) and content on the given topic."},
                  {"role": "user", "content": $prompt}
                ]
              }')
              # Call OpenAI API for chat completion
              RESPONSE=$(curl -sS -H "Content-Type: application/json" \
                -H "Authorization: Bearer $OPENAI_API_KEY" \
                -d "$REQUEST_DATA" \
                https://api.openai.com/v1/chat/completions)
              # Extract the assistant's message content (the MDX text)
              POST_CONTENT=$(echo "$RESPONSE" | jq -r '.choices[0].message.content')
              # Stop here if the API returned an error instead of a post
              if [ -z "$POST_CONTENT" ] || [ "$POST_CONTENT" = "null" ]; then
                echo "LLM returned no content: $RESPONSE" >&2
                exit 1
              fi
              # Create a filename for the new post (slug from date)
              TIMESTAMP=$(date +"%Y-%m-%d-%H%M")
              FILENAME="posts/llm-post-$TIMESTAMP.mdx"
              echo "Creating new post file: $FILENAME"
              echo "$POST_CONTENT" > "$FILENAME"

          # Step 4: Commit and push the new post
          - name: Commit changes
            run: |
              git config user.name "github-actions"
              git config user.email "github-actions@users.noreply.github.com"
              git add posts/*.mdx
              git commit -m "Add new blog post generated by LLM"
              git push

Let's break down what this workflow does:

- Trigger & Inputs: We use workflow_dispatch so that the workflow can be run manually from the Actions tab, supplying a prompt input. For example, you could enter "Explain how to set up a Next.js blog with MCP" as the prompt when running the workflow.

- Permissions: We set contents: write to allow the action to push commits. The workflow uses the built-in GITHUB_TOKEN (which is automatically provided) to authenticate the push. (Depending on the repository or organization settings, the GITHUB_TOKEN may default to read-only access, which is why the workflow requests write access explicitly.)

- Step 1: Check out the repository code. This gives us the repository files on the runner, so we can add a new file.

- Step 2: Ensure jq (a JSON parser) is available for processing the API response. (Ubuntu runners often have it, but we explicitly install to be safe.)

- Step 3: "Generate blog content with OpenAI" – this is where we call the LLM:

  - We load the OpenAI API key from secrets. Make sure to add OPENAI_API_KEY as a repository secret (Settings → Secrets and variables → Actions). If you prefer Anthropic's Claude, you'd use a different API endpoint and key (we'll discuss that soon).

  - We construct the API request JSON using jq. We use the Chat Completion API with the gpt-3.5-turbo model in this example. The system message instructs the AI to respond with an MDX formatted blog post, including a YAML frontmatter containing title, date, description, and type: posts. The user message is the prompt provided to the workflow.

  - We call the OpenAI API via curl. The response is captured in a variable.

  - Using jq, we extract the content of the AI's answer (the .choices[0].message.content field from the JSON) and save it in POST_CONTENT. This should be the complete MDX text.

  - We generate a filename for the new post. Here we use the current date/time (to the minute) to create a slug like llm-post-2025-04-15-1211.mdx. This keeps names unique as long as the workflow does not run twice within the same minute. In a real setup, you might parse the title from the frontmatter and create a slug, but using a timestamp or a user-provided slug is simpler for automation.

  - We then write the content to the new file in the posts/ directory.

- Step 4: Commit and push:

  - We configure a commit author (using GitHub's provided noreply email).

  - We add the new file and create a commit with a message (e.g., "Add new blog post generated by LLM"). We then push the commit to the repository. Once pushed to the main branch, this new post will become available on the blog (assuming your blog is automatically deployed from the repo, such as via Vercel or GitHub Pages).

After the workflow runs, you should see a new file in the repository (for example, posts/llm-post-2025-04-15-1211.mdx). It will contain a frontmatter section and the content generated by the AI. Nextra will include this in the list of posts on your blog. You might want to pull the changes locally to review or edit the content as needed.
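Because the workflow commits whatever the model returns, a small check before the commit can catch a reply that lacks the expected frontmatter. The following is a minimal JavaScript sketch of such a check, which could run as an extra Node step in the workflow. The helper name validateFrontmatter and the list of required fields are assumptions taken from the system prompt above, not something Nextra itself provides.

```javascript
// Hypothetical helper: checks that generated MDX starts with a YAML
// frontmatter block containing the fields the system prompt asks for.
const REQUIRED_FIELDS = ["title", "date", "description", "type"];

function validateFrontmatter(mdx) {
  // Frontmatter must open the file: a line of ---, the fields, a line of ---
  const match = /^---\r?\n([\s\S]*?)\r?\n---/.exec(mdx);
  if (!match) {
    return { ok: false, missing: [...REQUIRED_FIELDS] };
  }
  // Collect top-level keys of the form "key: value"
  const keys = match[1]
    .split(/\r?\n/)
    .map((line) => /^([A-Za-z_][\w-]*):/.exec(line))
    .filter(Boolean)
    .map((m) => m[1]);
  const missing = REQUIRED_FIELDS.filter((field) => !keys.includes(field));
  return { ok: missing.length === 0, missing };
}
```

A workflow step could call this on the generated text and exit with a non-zero status when ok is false, so that incomplete posts never reach the commit step.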

Testing the Workflow: Go to your repository's "Actions" tab, select the LLM Blog Post Generator workflow, and run it with a test prompt. The workflow logs will show the prompt, the file creation, and the commit step. Once completed, refresh your repository to see the new post file. If your site is auto-deployed (for example, Vercel might pick up the commit), the new blog post will be live. If not, you may need to trigger a site rebuild/deploy to see the changes on the live site.
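Besides the Actions tab, a workflow_dispatch workflow can also be started through GitHub's REST API, which is convenient when you want to trigger generation from a script. The sketch below only builds the request and does not send it. The owner, repo, and token values are placeholders, and buildDispatchRequest is a hypothetical helper name.

```javascript
// Builds (but does not send) the REST call that triggers a
// workflow_dispatch run of llm-agent.yml. Owner, repo and token are
// placeholders supplied by the caller.
function buildDispatchRequest({ owner, repo, token, prompt, ref = "main" }) {
  return {
    url: `https://api.github.com/repos/${owner}/${repo}/actions/workflows/llm-agent.yml/dispatches`,
    options: {
      method: "POST",
      headers: {
        Accept: "application/vnd.github+json",
        Authorization: `Bearer ${token}`,
        "Content-Type": "application/json",
      },
      // The keys under "inputs" must match the inputs declared in the workflow
      body: JSON.stringify({ ref, inputs: { prompt } }),
    },
  };
}

// Usage sketch (Node 18+ ships a global fetch):
// const { url, options } = buildDispatchRequest({
//   owner: "me", repo: "blog", token: process.env.GITHUB_PAT, prompt: "My topic",
// });
// const res = await fetch(url, options); // check res.ok for success
```

The token used here needs permission to run workflows on the repository; the run itself still pushes with the workflow's own GITHUB_TOKEN.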

Note: This approach can also be triggered in other ways. For instance, you could configure it to run when you open a GitHub Issue with a certain label or comment – the action could read the issue text as the prompt. For simplicity, we used a manual trigger here. Also, keep in mind that the OpenAI API call may incur costs and latency, and the content isn't reviewed by a human before publishing. In a real scenario, you might want to have the workflow create a Pull Request with the new post (instead of committing directly to main) so you can review the AI's writing before it goes live.
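To illustrate the pull request variant mentioned in the note, here is a hedged sketch of the three GitHub REST calls involved: create a branch, add the file on that branch, and open a pull request. It only describes the requests as data. The branch naming scheme and the helper name buildPullRequestPlan are assumptions, and baseSha must be looked up separately (it is the commit the new branch starts from, typically the tip of main).

```javascript
// Describes the REST calls needed to publish via a pull request instead of
// pushing straight to main. Nothing is sent; the caller executes the plan.
function buildPullRequestPlan({ owner, repo, baseSha, path, mdx, title }) {
  const api = `https://api.github.com/repos/${owner}/${repo}`;
  const branch = `llm-post-${Date.now()}`; // hypothetical naming scheme
  return [
    // 1. Create the branch from the given base commit
    {
      method: "POST",
      url: `${api}/git/refs`,
      body: { ref: `refs/heads/${branch}`, sha: baseSha },
    },
    // 2. Add the MDX file on that branch (the API expects Base64 content)
    {
      method: "PUT",
      url: `${api}/contents/${path}`,
      body: {
        message: `Add post: ${title}`,
        branch,
        content: Buffer.from(mdx, "utf-8").toString("base64"),
      },
    },
    // 3. Open the pull request against main for human review
    {
      method: "POST",
      url: `${api}/pulls`,
      body: { title: `New post: ${title}`, head: branch, base: "main" },
    },
  ];
}
```

Each entry can be sent in order with fetch or Octokit using a token that has write access; merging the resulting pull request then publishes the post.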

Finally, if you prefer using Anthropic's Claude instead of OpenAI, you can swap out the API call in step 3. Claude's API (the Anthropic API) uses a different endpoint and payload structure: the current Messages API at https://api.anthropic.com/v1/messages takes a messages array similar to OpenAI's chat format, while the older Text Completions API at https://api.anthropic.com/v1/complete expects a single prompt string of the form \n\nHuman: {your prompt}\n\nAssistant: together with a model such as claude-2. Ensure you set your Claude API key as a secret and adjust the headers accordingly (Anthropic expects an x-api-key header and an anthropic-version header rather than a Bearer token). The rest of the workflow (writing the file and committing) remains the same.
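As a hedged illustration of that swap, the sketch below shows how the request might be built for Anthropic's Messages API (https://api.anthropic.com/v1/messages) and how the text could be read from the reply. The helper names are hypothetical, and the model identifier is left to the caller because available model names change over time.

```javascript
// Same instruction as the system message used in the OpenAI example
const SYSTEM_PROMPT =
  "You are a technical blogging assistant. Write an MDX blog post with a " +
  "YAML frontmatter (title, date, description, type: posts) and content on the given topic.";

// Builds (but does not send) a Messages API request
function buildClaudeRequest({ apiKey, model, prompt, maxTokens = 2000 }) {
  return {
    url: "https://api.anthropic.com/v1/messages",
    options: {
      method: "POST",
      headers: {
        "content-type": "application/json",
        "x-api-key": apiKey,
        "anthropic-version": "2023-06-01",
      },
      body: JSON.stringify({
        model,
        max_tokens: maxTokens,
        system: SYSTEM_PROMPT,
        messages: [{ role: "user", content: prompt }],
      }),
    },
  };
}

// The reply holds a list of content blocks; concatenate the text ones
function extractClaudeText(response) {
  return response.content
    .filter((block) => block.type === "text")
    .map((block) => block.text)
    .join("");
}
```

In the workflow, the equivalent curl call would send the same headers and JSON body, and jq would read .content[0].text instead of .choices[0].message.content.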

Approach 2: Hosted MCP Server via a Next.js API Endpoint

In this approach, we embed the MCP client/server logic into the blog application itself. We'll create a Next.js API route that accepts a request (containing, say, a prompt or instructions), invokes the LLM to generate the MDX content, and then commits the content to the GitHub repository. This could be used in a web UI to have a live chat or form where you ask the AI for a post, rather than going through GitHub Actions.

This method requires your Next.js app (the blog) to have access to the GitHub repo. Typically, you'd deploy your app on a platform (like Vercel or a self-hosted environment) and provide a GitHub Personal Access Token (PAT) to the server so it can push commits. The PAT should have permissions to push to your blog's repo.

Setup: In your Next.js project, add the necessary API keys as environment variables. For example, in a .env.local file (which you do not commit):

    OPENAI_API_KEY=<your OpenAI API key>
    GITHUB_PAT=<your GitHub Personal Access Token>
    GITHUB_REPO_OWNER=<github username or org>
    GITHUB_REPO_NAME=<repo name, e.g., nextra-agent-blog>

Make sure to include these in your deployment environment as well. The GitHub token should have repo scope (at least content write access). If the blog repo is the same as the Next.js project, the owner/name will be that repository; if you plan to push to a separate content repo, set those accordingly.
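A missing variable otherwise only surfaces as a failed API call at request time, so it can help to check the configuration up front. This is a small sketch under that assumption; the helper name assertEnv is hypothetical, and the variable names are the ones listed above.

```javascript
// The four variables the API route relies on
const REQUIRED_ENV = [
  "OPENAI_API_KEY",
  "GITHUB_PAT",
  "GITHUB_REPO_OWNER",
  "GITHUB_REPO_NAME",
];

// Throws a descriptive error when any required variable is unset or empty
function assertEnv(env = process.env) {
  const missing = REQUIRED_ENV.filter((name) => !env[name]);
  if (missing.length > 0) {
    throw new Error(`Missing environment variables: ${missing.join(", ")}`);
  }
  return true;
}
```

Calling assertEnv() at the top of the handler turns a confusing downstream failure into a clear configuration error.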

Next, create a new API route. In a Next.js 13 project with the pages router, you can create a file at pages/api/generate-post.js (or .ts if using TypeScript). For Next.js 13 app router, you'd create a route under the app directory. For simplicity, we'll show a pages API route example:

    // pages/api/generate-post.js
    // Written against version 3 of the openai package (Configuration / OpenAIApi)
    import { Octokit } from "@octokit/rest";
    import { Configuration, OpenAIApi } from "openai";

    export default async function handler(req, res) {
      // Only POST requests carry a prompt in the body
      if (req.method !== "POST") {
        return res.status(405).json({ error: "Method not allowed" });
      }
      const { prompt } = req.body || {};
      if (!prompt) {
        return res.status(400).json({ error: "No prompt provided" });
      }

      try {
        // 1. Initialize OpenAI client
        const config = new Configuration({ apiKey: process.env.OPENAI_API_KEY });
        const openai = new OpenAIApi(config);

        // 2. Call the OpenAI API to get a blog post content
        const chatCompletion = await openai.createChatCompletion({
          model: "gpt-3.5-turbo",
          messages: [
            { role: "system", content: "You are a technical blogging assistant. Write an MDX blog post with a YAML frontmatter (title, date, description, type: posts) for the given topic." },
            { role: "user", content: prompt }
          ]
        });
        const mdxContent = chatCompletion.data.choices[0].message.content;

        // 3. Prepare the new post file details
        // Extract title from frontmatter for filename (optional improvement).
        // The lazy match keeps surrounding quotes out of the captured title.
        const titleMatch = mdxContent.match(/^title:[ \t]*["']?(.+?)["']?[ \t]*$/m);
        const titleText = titleMatch ? titleMatch[1] : "untitled-post";
        // Create a slug from title (lowercase, hyphens, no special chars)
        const slug = titleText.toLowerCase().replace(/[^a-z0-9]+/g, "-").replace(/(^-|-$)/g, "");
        const dateStr = new Date().toISOString();
        const fileName = `posts/${slug || "untitled"}-${Date.now()}.mdx`;

        // If date not in frontmatter, you can prepend or replace it
        // (Assume the LLM included date, but if not, we can insert it)
        let postContentFinal = mdxContent;
        if (!/^date:/m.test(mdxContent)) {
          postContentFinal = postContentFinal.replace(/^(title:.*)$/m, `$1\ndate: ${dateStr}`);
        }
        // Ensure type: posts is present
        if (!/^type:\s*posts/m.test(postContentFinal)) {
          postContentFinal = postContentFinal.replace(/^(title:.*)$/m, `$1\ntype: posts`);
        }

        // 4. Commit the file to GitHub using Octokit
        const octokit = new Octokit({ auth: process.env.GITHUB_PAT });
        const owner = process.env.GITHUB_REPO_OWNER;
        const repo = process.env.GITHUB_REPO_NAME;
        const path = fileName;
        const message = `Add new post "${titleText}" via MCP`;
        const contentEncoded = Buffer.from(postContentFinal, "utf-8").toString("base64");

        // Create or update the file in the repo
        await octokit.rest.repos.createOrUpdateFileContents({
          owner,
          repo,
          path,
          message,
          content: contentEncoded,
          committer: {
            name: "MCP Bot",
            email: "mcp-bot@example.com"
          },
          author: {
            name: "MCP Bot",
            email: "mcp-bot@example.com"
          }
        });

        return res.status(200).json({ message: "Post created", slug });
      } catch (error) {
        console.error("Error generating post:", error);
        return res.status(500).json({ error: error.message });
      }
    }

In this code:

- We use the official OpenAI Node.js SDK (openai package) to simplify calling the model. The Configuration and OpenAIApi classes shown here belong to version 3 of that package; later major versions expose a different client interface. We send a very similar prompt as in the Actions workflow: the system prompt instructs the assistant to produce an MDX blog post with a proper frontmatter, and the user prompt is the topic from the request.

- The AI's response is captured in mdxContent. We then attempt to parse out the title from the frontmatter using a regex. We form a slug from that title for the filename. (If the model didn't follow instructions perfectly, we have fallbacks: an "untitled-post" slug, and we also ensure the date and type: posts fields are present in the frontmatter by editing the text.)

- We create a file path like posts/your-post-title-<timestamp>.mdx. The timestamp (from Date.now()) keeps file names unique even when titles are similar.

- We then instantiate Octokit (GitHub's REST API client for JavaScript) with our PAT and use it to create or update file contents in the repository. We specify the owner, repo, file path, a commit message, and the file content in Base64 encoding (as required by GitHub's API). We also provide dummy committer/author info (you can use your info or keep a bot identity).

- If successful, we return a JSON response with a success message and the slug (a front-end could use this to show the new post link). In case of errors, we catch and log them, returning a 500 status with the error message.
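One case the fallbacks listed above do not cover is a reply that arrives wrapped in a Markdown code fence, which would push the frontmatter off the first line of the file. That models sometimes do this is an assumption about model behavior, not something guaranteed either way. The sketch below shows a hypothetical helper, stripOuterFence, that removes one such outer wrapper and leaves everything else untouched.

```javascript
// Three backticks, built with repeat() so this snippet stays readable
const FENCE = "`".repeat(3);

// Removes a single code fence that wraps the entire reply, if present.
// Fences inside the post body are left alone.
function stripOuterFence(text) {
  const trimmed = text.trim();
  const lines = trimmed.split(/\r?\n/);
  const first = lines[0];
  const last = lines[lines.length - 1];
  const wrapped =
    lines.length >= 2 && first.startsWith(FENCE) && last.trim() === FENCE;
  return wrapped ? lines.slice(1, -1).join("\n") : trimmed;
}
```

In the route, this could be applied to mdxContent right after it is read from the completion and before the title is extracted.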

Using the API: Now you can deploy this Next.js app (with the environment variables set). To generate a post, send a POST request to /api/generate-post with JSON body {"prompt": "Your desired topic or question"}. For example, using curl from your machine or a REST client:

    curl -X POST https://<your-deployed-site>/api/generate-post \
      -H "Content-Type: application/json" \
      -d '{"prompt": "How to implement Model Context Protocol in a Next.js application?"}'

The server will respond once the post is created. Behind the scenes, the LLM writes the content and the server commits it. After a few seconds, you should find the new MDX file in your GitHub repository (check the commit history for the new commit with message "Add new post ... via MCP"). If your site redeploys on git push (common with static site hosts or CI setups), the new post will become visible on the blog.

This method effectively creates a simple MCP server endpoint: it exposes a capability (create a blog post) to be triggered by an AI. In a real MCP setup, an AI agent could discover this tool and invoke it as needed. In our case, we've tightly coupled it – the endpoint calls the AI and then does the action – but one could separate concerns by first letting the AI decide to call a "commit to blog" tool. For instance, you might design a prompt where the AI's response could indicate an action (like in a JSON payload) and then have the server perform it. That would be a more explicit MCP tool usage pattern. However, our integrated approach is simpler to implement and sufficient for a single-user system.
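To make the decoupled variant more concrete, the model could be asked to reply with a JSON object that names a tool and its arguments, and the server would run only tools it knows about. The sketch below illustrates that idea in simplified form; it is not the MCP wire format. The reply shape ({ tool, arguments }), the tool name create_post, and the stub handler are all assumptions for illustration.

```javascript
// Registry of actions the server is willing to perform. The handler is a
// stub; a real one would commit the file the way the API route does.
const tools = {
  create_post: ({ title, mdx }) => ({ committed: true, title, bytes: mdx.length }),
};

// Parses a model reply such as {"tool":"create_post","arguments":{...}}.
// Returns null when the reply is not a valid request for a known tool.
function parseAction(reply) {
  let data;
  try {
    data = JSON.parse(reply);
  } catch {
    return null; // plain prose, not an action
  }
  if (!data || typeof data.tool !== "string") return null;
  if (!Object.hasOwn(tools, data.tool)) return null;
  return { tool: data.tool, args: data.arguments ?? {} };
}

// Runs the requested tool, or returns null if there is nothing to run
function runAction(reply) {
  const action = parseAction(reply);
  return action ? tools[action.tool](action.args) : null;
}
```

Checking the tool name against a fixed registry matters here: the server never executes anything the model invents, only actions it has explicitly registered.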

A few things to note for this Next.js approach:

- Ensure your repository has a posts directory (or adjust the path in the code to where your blog content lives). The jojocys/nextra-agent-blog repository likely uses a similar structure for posts. The MDX frontmatter should include at least title, date, and type: posts as shown, so that Nextra's blog theme recognizes it as a blog post and displays the date. We inserted those if missing. You can also add other frontmatter fields like description or tags if needed.

- Running this endpoint will consume your LLM API credits and create a commit every time. You may want to add basic protection – since we assume single user and no auth, at least keep the endpoint URL secret. For a more robust setup, you could implement a simple auth check (e.g., a secret token in the request header, or restrict access by origin if the request comes from your own site).

- The content generated by the AI can be adjusted by tweaking the prompt. You might enforce a certain structure or length. Since MDX is essentially Markdown, the AI's output likely will include Markdown syntax, which Nextra can render. If the AI includes any JSX or imports in MDX, ensure your blog setup can handle it (this is advanced usage; our prompt didn't ask for such).

- If using Anthropic's Claude in this setup: you could install Anthropic's SDK (or use fetch) and call Claude's API instead of OpenAI. The logic remains largely the same: send the prompt, get the completion. Claude might follow the instructions about frontmatter well if prompted similarly. The main difference is the API call syntax. (For example, using the @anthropic-ai/sdk NPM package, you would create a client with your API key, call client.messages.create with the model, max_tokens, and messages, and then read the generated text from the content blocks of the response.)
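The simple auth check suggested in the notes above could look roughly like the following. This is a sketch, not a complete security solution: the header name x-generate-secret and the environment variable GENERATE_POST_SECRET are made up for this example.

```javascript
// Compares two strings without stopping at the first mismatch.
// In Node, crypto.timingSafeEqual could be used for the same purpose.
function safeEqual(a, b) {
  if (a.length !== b.length) return false;
  let diff = 0;
  for (let i = 0; i < a.length; i += 1) {
    diff |= a.charCodeAt(i) ^ b.charCodeAt(i);
  }
  return diff === 0;
}

// Hypothetical guard for the API route: accepts the request only when the
// header carries the shared secret configured on the server.
function isAuthorized(req, secret = process.env.GENERATE_POST_SECRET) {
  const provided = req.headers["x-generate-secret"];
  if (!secret || typeof provided !== "string") return false;
  return safeEqual(provided, secret);
}
```

At the top of the handler this would be used as: if (!isAuthorized(req)) return res.status(401).json({ error: "Unauthorized" }). An unset secret rejects every request, so a forgotten environment variable fails closed rather than open.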

Adapting and Going Further

We've demonstrated two ways to integrate an AI assistant with a blog repository using the Model Context Protocol idea. Depending on your needs, you might choose one or even combine them. For instance, you could use GitHub Actions for scheduled or on-demand bulk generation (serverless and free for small usage), while also having the Next.js API for interactive use on your deployed site.

Because MCP is all about standardizing how AI interacts with tools, you could generalize this approach. Today we focused on a "blog post generation" tool, but you could imagine adding other MCP servers to your system. For example, you might integrate a Git MCP server for general repository browsing or a Google Drive server for retrieving reference files. In fact, the open-source MCP servers already include integrations for many platforms (GitHub, GitLab, Slack, etc.) (GitHub - modelcontextprotocol/servers: Model Context Protocol Servers). Those could be plugged into an AI agent with minimal setup. Our custom implementation here mirrors the purpose of the MCP GitHub server – providing the AI a way to perform repository operations (in our case, creating a file via commit).

Multi-step Interaction: Our current design simply takes a prompt and generates a single post. But you might want a more interactive process – for example, a chat where you and the AI brainstorm the content before finalizing the post. You can build a UI on top of the Next.js API to facilitate that: perhaps have a chat interface, and when you are satisfied with the AI's answer, you press a "Publish" button that sends the final answer to the generate-post endpoint. This way, the AI can incorporate your feedback (via multiple messages) and you have more control over the output.

Review and Moderation: If you plan to use this in production, consider adding a human review step. The GitHub Actions approach could be modified to open a Pull Request instead of committing to main, allowing you to review/edit the MDX file and then merge it. This adds a safety net for the AI's content. You could also use OpenAI's content filters or other moderation APIs on the AI's output before publishing, to ensure nothing inappropriate gets posted.
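As one possible shape for the moderation step, the sketch below builds a request for OpenAI's moderation endpoint and interprets the reply. The helper names are hypothetical, and the request is only constructed here, not sent.

```javascript
// Builds (but does not send) a request to OpenAI's moderation endpoint
function buildModerationRequest(apiKey, text) {
  return {
    url: "https://api.openai.com/v1/moderations",
    options: {
      method: "POST",
      headers: {
        "Content-Type": "application/json",
        Authorization: `Bearer ${apiKey}`,
      },
      body: JSON.stringify({ input: text }),
    },
  };
}

// The response carries one result per input; publish only if none is flagged
function isSafeToPublish(moderationResponse) {
  return moderationResponse.results.every((result) => !result.flagged);
}
```

In the API route, this check would sit between receiving mdxContent and committing it, returning an error response instead of publishing when the content is flagged.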

Connecting to the MCP Ecosystem: While our solution works with direct API calls, it's conceptually aligned with MCP's goals. If you were using an AI assistant platform that supports MCP (for example, Claude Desktop or other IDE integrations), you could register a similar "blog post" tool with it. In that scenario, you'd run an MCP server that exposes a create_post tool. The AI agent could then dynamically decide to invoke that tool when asked to publish content. The Model Context Protocol formalizes such interactions (tools are defined with names, parameters, and the client uses JSON-RPC to call them). For a deeper dive into building formal MCP servers/clients, you can refer to the official MCP documentation and examples (Model Context Protocol (MCP) Explained; Build and deploy Remote Model Context Protocol (MCP) servers to Cloudflare).
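To give a feel for what such a tool looks like on the wire, here is a hedged sketch of a create_post tool description and the JSON-RPC request a client would send to invoke it. The field names (name, description, inputSchema) and the tools/call method follow the MCP tool format; the specific parameters title and mdx are assumptions for this blog, and a real server would normally be built with an MCP SDK rather than by hand.

```javascript
// How a create_post tool might be described to an MCP client.
// inputSchema is a JSON Schema describing the accepted arguments.
const createPostTool = {
  name: "create_post",
  description: "Commit a new MDX blog post to the blog repository",
  inputSchema: {
    type: "object",
    properties: {
      title: { type: "string", description: "Post title" },
      mdx: { type: "string", description: "Full MDX source including frontmatter" },
    },
    required: ["title", "mdx"],
  },
};

// A client invokes a tool with a JSON-RPC 2.0 request whose method is
// "tools/call"; params carry the tool name and its arguments.
function buildToolCall(id, args) {
  return {
    jsonrpc: "2.0",
    id,
    method: "tools/call",
    params: { name: createPostTool.name, arguments: args },
  };
}
```

For example, buildToolCall(1, { title: "Hello MCP", mdx: "..." }) produces the message an MCP client would send once the model decides to publish; the server's handler for create_post would then perform the same commit logic as the API route.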

Conclusion

We built a system that bridges an AI model with a content repository – effectively letting the AI become a content creator on our blog. We showed a GitHub Actions workflow that leverages GitHub's infrastructure to operate without a dedicated server, and a Next.js-based service that offers on-demand interaction. Both achieve the same end result: an MDX blog article generated by an AI and published via Git commit.

This demonstrates the power of treating integrations as modular "tools" for AI. By giving the model a way to perform an action (in this case, writing to our blog), we expand its capabilities beyond static Q&A. MCP's vision is exactly this – a standardized way for AI to interface with external systems – and our blog post generator is a concrete example of that vision in action. With a consistent protocol, an AI agent could just as easily plug into a dozen other services. Today it's a blog post; tomorrow it could be updating a database, or scheduling a tweet, all through the same unified interface (Model Context Protocol (MCP) Explained).

Feel free to adapt the code to your own needs: adjust the prompting, add more error handling, or integrate other LLM providers. Happy blogging with your new AI collaborator!

Sources:

- Anthropic, Introducing the Model Context Protocol (MCP) – Open standard for connecting AI assistants to external systems (Model Context Protocol (MCP) Explained).

- Cloudflare, Bringing MCP to the masses – MCP lets AI agents take actions like publishing blog posts via external tools (Build and deploy Remote Model Context Protocol (MCP) servers to Cloudflare).

- Model Context Protocol Open-Source Servers – Includes a GitHub server for repository operations (file management, GitHub API integration) (GitHub - modelcontextprotocol/servers: Model Context Protocol Servers).