OpenAI Function Calling: Examples to Get Started


OpenAI’s function calling (now exposed through the tools parameter) has become one of the most important building blocks for real applications built on large language models. It lets you connect models like gpt-4.1 and gpt-4.1-mini to your own APIs, databases, and business logic.

Instead of asking the model to “write some JSON” and hoping it follows your format, you describe functions in JSON Schema, and the model returns a structured function call that your code can parse, validate, and execute.

In this guide, you’ll learn:

- What OpenAI function calling is and how it works today

- How to define tools/functions with JSON Schema

- Practical examples: scheduling meetings, getting stock prices, booking travel

- How to use modern features like Structured Outputs to make function calling more reliable

- Best practices, common pitfalls, and FAQs

What Is OpenAI Function Calling (Tools)?

Function calling allows a model to respond with machine-readable function calls instead of plain text. In the modern API, these are represented as tools:

- You define tools with:
  - type: "function"
  - function.name
  - function.description
  - function.parameters (a JSON Schema describing the arguments)

- You send these tools along with your prompt.

- The model chooses whether to call a tool and returns a tool call with a function name and JSON arguments.

- Your application:
  - Parses the tool call,
  - Executes the corresponding function in your backend,
  - Optionally sends the result back to the model to generate a final, user-facing answer.
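The application-side steps above (parse the tool call, execute the matching function) can be sketched in plain Python. This is a minimal sketch, assuming the tool call has already been turned into a dict shaped like the Chat Completions tool_calls entries shown later in this guide; the schedule_meeting stub, TOOL_REGISTRY, and run_tool_call are hypothetical names, not part of any OpenAI SDK.

```python
import json
from typing import Any, Callable


def schedule_meeting(attendee: str, date: str, time: str) -> dict:
    # Hypothetical stub: a real version would call your calendar API.
    return {"status": "scheduled", "attendee": attendee, "date": date, "time": time}


# Map each tool name declared to the model onto the Python function that implements it.
TOOL_REGISTRY: dict[str, Callable[..., Any]] = {"schedule_meeting": schedule_meeting}


def run_tool_call(tool_call: dict) -> Any:
    """Parse one tool call and execute the matching backend function."""
    name = tool_call["function"]["name"]
    func = TOOL_REGISTRY.get(name)
    if func is None:
        raise ValueError(f"model requested an unknown tool: {name}")
    # The arguments arrive as a JSON-encoded string, not as a dict.
    args = json.loads(tool_call["function"]["arguments"])
    return func(**args)


call = {
    "id": "call_123",
    "type": "function",
    "function": {
        "name": "schedule_meeting",
        "arguments": '{"attendee": "John Doe", "date": "2025-11-18", "time": "15:00 Europe/Berlin"}',
    },
}
print(run_tool_call(call))
```

Looking tools up in an explicit registry means the model can only trigger functions you chose to expose; an unknown name is rejected instead of being resolved dynamically.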

Under the hood, function calling is supported in:

- The Responses API (POST /v1/responses), the recommended way for new applications.

- The classic Chat Completions API (POST /v1/chat/completions), still widely used and supported.

The examples in this guide use the Chat Completions request format.
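Because the two APIs shape tool definitions slightly differently, it can help to keep one canonical definition and convert it. This is a minimal sketch, assuming that Responses API function tools carry name, description, parameters, and strict at the top level rather than under a function key, as OpenAI’s Responses documentation showed at the time of writing; verify against the current API reference. The helper name to_responses_tool is hypothetical.

```python
def to_responses_tool(chat_tool: dict) -> dict:
    """Flatten a Chat Completions tool definition into the (assumed) Responses API shape.

    Assumption: Responses API function tools put name/description/parameters/strict
    at the top level instead of nesting them under "function".
    """
    if chat_tool.get("type") != "function":
        raise ValueError("only function tools are converted here")
    fn = chat_tool["function"]
    flat = {"type": "function", "name": fn["name"]}
    # Copy optional keys only when present, so the output mirrors the input.
    for key in ("description", "parameters", "strict"):
        if key in fn:
            flat[key] = fn[key]
    return flat


chat_tool = {
    "type": "function",
    "function": {
        "name": "get_stock_price",
        "description": "Get the current stock price for a ticker symbol.",
        "parameters": {
            "type": "object",
            "properties": {"ticker_symbol": {"type": "string"}},
            "required": ["ticker_symbol"],
            "additionalProperties": False,
        },
        "strict": True,
    },
}
print(to_responses_tool(chat_tool))
```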

Historically, function calling used the functions and function_call parameters. These are now deprecated in favor of tools and tool_choice, so all new code should use the new style.
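Migrating an old Chat Completions request body is mostly mechanical: each entry of the legacy functions list is wrapped in a { "type": "function", "function": ... } object, and function_call maps onto tool_choice. This is a minimal sketch for request bodies only; the helper name migrate_legacy_request is hypothetical.

```python
import copy


def migrate_legacy_request(body: dict) -> dict:
    """Rewrite a legacy Chat Completions request (functions/function_call)
    into the tools/tool_choice style. Other keys are left untouched."""
    new_body = copy.deepcopy(body)  # never mutate the caller's request
    functions = new_body.pop("functions", None)
    if functions is not None:
        new_body["tools"] = [{"type": "function", "function": f} for f in functions]
    function_call = new_body.pop("function_call", None)
    if function_call is not None:
        if isinstance(function_call, str):
            # "auto" and "none" keep the same meaning under tool_choice.
            new_body["tool_choice"] = function_call
        else:
            # {"name": "..."} forced a specific function; wrap it in the new shape.
            new_body["tool_choice"] = {
                "type": "function",
                "function": {"name": function_call["name"]},
            }
    return new_body


legacy = {
    "model": "gpt-4.1-mini",
    "messages": [{"role": "user", "content": "What's the current price of Apple stock?"}],
    "functions": [{"name": "get_stock_price", "parameters": {"type": "object", "properties": {}}}],
    "function_call": {"name": "get_stock_price"},
}
print(migrate_legacy_request(legacy))
```

This sketch does not rewrite conversation history: legacy assistant messages carrying function_call, and result messages with role "function", also need to become tool_calls and role "tool" messages.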

Basic Example: Schedule a Meeting (Chat Completions API)

Let’s start with a simple example using the Chat Completions API. We’ll ask the model to schedule a meeting and let it return structured arguments for a schedule_meeting function.

JSON request (conceptual)

{
  "model": "gpt-4.1-mini",
  "messages": [
    {
      "role": "user",
      "content": "Schedule a meeting with John Doe next Tuesday at 3 PM."
    }
  ],
  "tools": [
    {
      "type": "function",
      "function": {
        "name": "schedule_meeting",
        "description": "Schedule a meeting in the calendar.",
        "parameters": {
          "type": "object",
          "properties": {
            "attendee": {
              "type": "string",
              "description": "Name of the attendee for the meeting."
            },
            "date": {
              "type": "string",
              "description": "Date of the meeting in ISO 8601 format."
            },
            "time": {
              "type": "string",
              "description": "Time of the meeting, including time zone."
            }
          },
          "required": ["attendee", "date", "time"],
          "additionalProperties": false
        },
        "strict": true
      }
    }
  ],
  "tool_choice": "auto"
}

The model’s reply will contain something like:

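{
  "role": "assistant",
  "tool_calls": [
    {
      "id": "call_123",
      "type": "function",
      "function": {
        "name": "schedule_meeting",
        "arguments": "{\"attendee\":\"John Doe\",\"date\":\"2025-11-18\",\"time\":\"15:00 Europe/Berlin\"}"
      }
    }
  ]
}

Note that arguments is a JSON-encoded string, not an object. Your backend can parse it, call your real schedule_meeting function (for example, using Google Calendar or Outlook), then optionally send the result back to the model for a friendly confirmation message. Keep in mind that the model can only turn “next Tuesday” into a concrete date, or choose the right time zone, if you tell it today’s date and the user’s time zone (for example, in a system message); otherwise those values are guesses.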
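Because arguments is a string, a small parsing layer lets the rest of your code work with typed values. This is a minimal sketch, assuming the schedule_meeting schema above; MeetingRequest and parse_meeting_args are hypothetical names. It only checks that date is a valid ISO 8601 calendar date and leaves time as free text, since the schema allows a time zone name in that field.

```python
import datetime
import json
from dataclasses import dataclass


@dataclass(frozen=True)
class MeetingRequest:
    attendee: str
    date: datetime.date
    time: str  # left as free text, e.g. "15:00 Europe/Berlin"


def parse_meeting_args(arguments: str) -> MeetingRequest:
    """Decode the tool-call arguments string and check that date is ISO 8601."""
    data = json.loads(arguments)
    try:
        meeting_date = datetime.date.fromisoformat(data["date"])
    except ValueError as exc:
        raise ValueError(f"date is not an ISO 8601 date: {data['date']!r}") from exc
    return MeetingRequest(attendee=data["attendee"], date=meeting_date, time=data["time"])


raw = '{"attendee":"John Doe","date":"2025-11-18","time":"15:00 Europe/Berlin"}'
request = parse_meeting_args(raw)
print(request.attendee, request.date.isoformat(), request.date.strftime("%A"))
```

Printing the weekday is a cheap sanity check that the model’s resolved date really is the “Tuesday” the user asked for.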

Example: Stock Price Lookup

Here’s a more “API-like” example: calling a get_stock_price function based on natural language.

Request with a get_stock_price tool

{
  "model": "gpt-4.1-mini",
  "messages": [
    {
      "role": "user",
      "content": "What's the current price of Apple stock?"
    }
  ],
  "tools": [
    {
      "type": "function",
      "function": {
        "name": "get_stock_price",
        "description": "Get the current stock price for a ticker symbol.",
        "parameters": {
          "type": "object",
          "properties": {
            "ticker_symbol": {
              "type": "string",
              "description": "Ticker symbol of the stock, e.g. AAPL."
            },
            "currency": {
              "type": "string",
              "enum": ["USD", "EUR", "GBP"],
              "description": "Currency for the price. Use USD if the user does not specify one."
            }
          },
          "required": ["ticker_symbol", "currency"],
          "additionalProperties": false
        },
        "strict": true
      }
    }
  ],
  "tool_choice": "auto"
}

When the user asks “What’s the current price of Apple stock?”, the model might produce a tool call like:

{
  "type": "function",
  "function": {
    "name": "get_stock_price",
    "arguments": "{\"ticker_symbol\":\"AAPL\",\"currency\":\"USD\"}"
  }
}

You then:

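- Call your real stock-price API with the parsed arguments.

- Return the result to the model in a follow-up request: append the assistant message that contains the tool call, then a message with role "tool" whose tool_call_id matches the call’s id and whose content is the result.

- Let the model generate a human-friendly explanation.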
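The follow-up request from the steps above can be sketched as a message list in the Chat Completions format. This is a minimal sketch: build_followup_messages is a hypothetical helper, and the price in the example is a placeholder standing in for your real stock-price API. The resulting list would be passed as messages in a second chat.completions.create call with the same tools.

```python
import json


def build_followup_messages(history: list[dict], assistant_msg: dict, results: dict[str, object]) -> list[dict]:
    """Append the assistant's tool-call message plus one "tool" message per call.

    results maps each tool_call id to the value your backend produced.
    """
    messages = list(history)  # leave the caller's history untouched
    messages.append(assistant_msg)
    for call in assistant_msg["tool_calls"]:
        messages.append({
            "role": "tool",
            "tool_call_id": call["id"],  # must match the id the model generated
            "content": json.dumps(results[call["id"]]),
        })
    return messages


history = [{"role": "user", "content": "What's the current price of Apple stock?"}]
assistant_msg = {
    "role": "assistant",
    "content": None,
    "tool_calls": [{
        "id": "call_456",
        "type": "function",
        "function": {"name": "get_stock_price", "arguments": '{"ticker_symbol":"AAPL","currency":"USD"}'},
    }],
}
# Placeholder result; a real app would call its market-data API here.
results = {"call_456": {"ticker_symbol": "AAPL", "price": 123.45, "currency": "USD"}}
for m in build_followup_messages(history, assistant_msg, results):
    print(m["role"])
```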

Example: Travel Booking with Function Calling

Function calling shines when prompts are messy and human, but your backend needs clean, structured parameters.

Consider this utterance:

“I need to book a trip from Bonn to Amsterdam for my wife, mother, my two sons, my daughter, and me. The airline must fly direct.”

We want the model to extract:

- departure

- destination

- number_people

- travel_mode (e.g. plane / train)

- non_stop_only (whether only direct connections are acceptable)

Request with a book_travel tool

{
  "model": "gpt-4.1-mini",
  "messages": [
    {
      "role": "user",
      "content": "I need to book a trip from Bonn to Amsterdam for my wife, mother, my two sons, my daughter, and me. The airline must fly direct."
    }
  ],
  "tools": [
    {
      "type": "function",
      "function": {
        "name": "book_travel",
        "description": "Search or book transportation for a group of travelers.",
        "parameters": {
          "type": "object",
          "properties": {
            "departure": {
              "type": "string",
              "description": "City or airport you are traveling from."
            },
            "destination": {
              "type": "string",
              "description": "City or airport you are traveling to."
            },
            "number_people": {
              "type": "integer",
              "description": "How many people are traveling."
            },
            "travel_mode": {
              "type": "string",
              "enum": ["plane", "train", "bus", "car"],
              "description": "Preferred mode of travel."
            },
            "non_stop_only": {
              "type": "boolean",
              "description": "Whether only non-stop options are allowed."
            }
          },
          "required": ["departure", "destination", "number_people", "travel_mode", "non_stop_only"],
          "additionalProperties": false
        },
        "strict": true
      }
    }
  ],
  "tool_choice": "auto"
}

The model might emit:

{
  "name": "book_travel",
  "arguments": "{\"departure\":\"Bonn\",\"destination\":\"Amsterdam\",\"number_people\":6,\"travel_mode\":\"plane\",\"non_stop_only\":true}"
}

From here, after validating the arguments, you can pass them to your flight-search service.
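Before handing the arguments to a flight-search service, it is worth converting them into a typed object and re-checking the constraints your backend cares about. This is a minimal sketch, assuming the book_travel schema above; TravelRequest and parse_travel_args are hypothetical names, and the checks (positive head count, known travel mode, distinct cities) are illustrative business rules rather than anything the model enforces.

```python
import json
from dataclasses import dataclass

TRAVEL_MODES = {"plane", "train", "bus", "car"}


@dataclass(frozen=True)
class TravelRequest:
    departure: str
    destination: str
    number_people: int
    travel_mode: str
    non_stop_only: bool


def parse_travel_args(arguments: str) -> TravelRequest:
    """Decode book_travel arguments and apply server-side sanity checks."""
    data = json.loads(arguments)
    req = TravelRequest(**data)  # raises TypeError on missing or unexpected keys
    if req.travel_mode not in TRAVEL_MODES:
        raise ValueError(f"unsupported travel_mode: {req.travel_mode!r}")
    # bool is a subclass of int in Python, so exclude it explicitly.
    if isinstance(req.number_people, bool) or not isinstance(req.number_people, int) or req.number_people < 1:
        raise ValueError("number_people must be a positive integer")
    if req.departure.strip().lower() == req.destination.strip().lower():
        raise ValueError("departure and destination must differ")
    return req


raw = ('{"departure":"Bonn","destination":"Amsterdam","number_people":6,'
       '"travel_mode":"plane","non_stop_only":true}')
req = parse_travel_args(raw)
# Illustrative routing rule: only plane requests go to a flight search.
if req.travel_mode == "plane":
    print(f"Search flights {req.departure} -> {req.destination} for {req.number_people}, non-stop={req.non_stop_only}")
```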

Using the Modern OpenAI SDKs (Python & JavaScript)

You will usually not send raw JSON by hand. Instead, you’ll use the official SDKs and work with strongly typed response objects.

Python example (Chat Completions + tools)

import json

from openai import OpenAI

client = OpenAI()  # reads OPENAI_API_KEY from the environment

tools = [
    {
        "type": "function",
        "function": {
            "name": "schedule_meeting",
            "description": "Schedule a meeting in the calendar.",
            "parameters": {
                "type": "object",
                "properties": {
                    "attendee": {"type": "string"},
                    "date": {"type": "string"},
                    "time": {"type": "string"},
                },
                "required": ["attendee", "date", "time"],
                "additionalProperties": False,
            },
            "strict": True,
        },
    }
]

completion = client.chat.completions.create(
    model="gpt-4.1-mini",
    messages=[
        {
            "role": "user",
            "content": "Schedule a meeting with John Doe next Tuesday at 3 PM.",
        }
    ],
    tools=tools,
    tool_choice="auto",
)

tool_calls = completion.choices[0].message.tool_calls

if tool_calls:
    call = tool_calls[0]
    # arguments is a JSON string; decode it into a dict
    args = json.loads(call.function.arguments)
    # Your real implementation:
    # result = schedule_meeting(**args)

JavaScript example (Node.js)

import OpenAI from "openai";

// Top-level await requires an ES module (for example, a .mjs file).
const client = new OpenAI(); // reads OPENAI_API_KEY from the environment

const tools = [
  {
    type: "function",
    function: {
      name: "get_stock_price",
      description: "Get the current price for a given ticker symbol.",
      parameters: {
        type: "object",
        properties: {
          ticker_symbol: {
            type: "string",
            description: "Stock ticker symbol, e.g. AAPL",
          },
        },
        required: ["ticker_symbol"],
        additionalProperties: false,
      },
      strict: true,
    },
  },
];

const response = await client.chat.completions.create({
  model: "gpt-4.1-mini",
  messages: [
    { role: "user", content: "What's the current price of Apple stock?" },
  ],
  tools,
  tool_choice: "auto",
});

const toolCalls = response.choices[0].message.tool_calls;

if (toolCalls) {
  // arguments is a JSON string; decode it before use
  const args = JSON.parse(toolCalls[0].function.arguments);
  // Your real implementation:
  // const result = await getStockPrice(args.ticker_symbol);
}

Structured Outputs: More Reliable Function Calling

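JSON Mode ensures the model returns valid JSON, but not necessarily JSON that matches your schema. Structured Outputs is a newer feature that tightens this by enforcing your JSON Schema when the model calls tools:

- Set "strict": true in your tool’s function definition.

- The model’s arguments are then guaranteed to conform to your schema (types, required fields, no extra properties), dramatically reducing parsing and validation errors.

- In strict mode, every property must be listed in required and every object must set additionalProperties: false. To make a field effectively optional, give it a nullable type such as ["string", "null"]. Strict mode supports only a subset of JSON Schema, so check the docs before relying on less common keywords.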

This is especially useful when:

- Extracting complex, nested data from unstructured text

- Building multi-step workflows where each step depends on precise structured data

- Generating parameters for downstream systems like SQL, analytics pipelines, or data visualizations

Even with Structured Outputs, you should still treat values as untrusted input (e.g., check ranges, handle missing IDs, validate business rules).
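Strict mode rejects schemas that leave a property out of required or omit additionalProperties: false, so a quick local check can catch these mistakes before you send a request. This is a minimal sketch that checks only those two rules, recursing into nested objects and array items. It is not a full validator for the JSON Schema subset that strict mode supports, and strict_schema_problems is a hypothetical helper.

```python
def strict_schema_problems(schema: dict, path: str = "$") -> list[str]:
    """Return strict-mode violations found in a JSON Schema.

    Checks two rules only: every object lists all of its properties in
    "required", and every object sets "additionalProperties": false.
    """
    problems: list[str] = []
    if schema.get("type") == "object" or "properties" in schema:
        props = schema.get("properties", {})
        missing = sorted(set(props) - set(schema.get("required", [])))
        if missing:
            problems.append(f"{path}: not in required: {missing}")
        if schema.get("additionalProperties") is not False:
            problems.append(f"{path}: additionalProperties must be false")
        for name, sub in props.items():
            problems.extend(strict_schema_problems(sub, f"{path}.{name}"))
    if isinstance(schema.get("items"), dict):
        problems.extend(strict_schema_problems(schema["items"], f"{path}[]"))
    return problems


# A schema with an optional field that strict mode would reject.
stock_params = {
    "type": "object",
    "properties": {
        "ticker_symbol": {"type": "string"},
        "currency": {"type": "string", "enum": ["USD", "EUR", "GBP"]},
    },
    "required": ["ticker_symbol"],
    "additionalProperties": False,
}
print(strict_schema_problems(stock_params))
```

Running a check like this in a unit test over all your tool definitions turns a runtime API error into a failing build.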

Design Patterns & Best Practices

1. Keep tools small and focused

Instead of one giant do_everything function, define small, composable tools:

- get_user_profile

- get_user_orders

- create_support_ticket

- schedule_meeting

This makes schemas easier to maintain and improves the model’s ability to choose the right tool.
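When you define many small tools, a helper with strict-friendly defaults (every property required, additionalProperties: false, strict: true) keeps each schema consistent. This is a minimal sketch that emits Chat Completions-style tool definitions; make_tool and the user_id and limit parameters are hypothetical. Because it marks every property as required, a truly optional field would need a nullable type instead.

```python
def make_tool(name: str, description: str, properties: dict, strict: bool = True) -> dict:
    """Build a Chat Completions-style tool definition with strict-friendly defaults."""
    return {
        "type": "function",
        "function": {
            "name": name,
            "description": description,
            "parameters": {
                "type": "object",
                "properties": properties,
                # Strict mode expects every property to appear in "required".
                "required": list(properties),
                # Reject any argument the schema does not declare.
                "additionalProperties": False,
            },
            "strict": strict,
        },
    }


# Two small, focused tools instead of one do_everything function.
tools = [
    make_tool(
        "get_user_profile",
        "Use this function when the user asks about their account or profile details.",
        {"user_id": {"type": "string", "description": "Internal user ID."}},
    ),
    make_tool(
        "get_user_orders",
        "Use this function whenever the user asks about their recent orders or order history.",
        {
            "user_id": {"type": "string", "description": "Internal user ID."},
            "limit": {"type": "integer", "description": "Maximum number of orders to return."},
        },
    ),
]
print([t["function"]["name"] for t in tools])
```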

2. Use clear names and descriptions

- Function names should be verb phrases: create_invoice, fetch_weather, book_travel.

- Descriptions should say when to use the function, not just what it does.

Bad:

“Get data from the system.”

Good:

“Use this function whenever the user asks about their recent orders or order history.”

3. Be strict with schemas

- Use required fields and additionalProperties: false.

- Use enums for known options ("enum": ["plane", "train"]).

- Add simple constraints where useful (string formats, minimum values for integers, etc.).

4. Validate and log everything

- Always validate tool arguments server-side before executing.

- Log tool calls and natural language prompts for debugging.

- Consider retries (possibly with a short, corrective system message) when validation fails.
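The validate, log, and retry advice can be combined into a small loop. This is a minimal sketch: call_model stands in for whatever function sends the conversation to the API and returns the raw arguments string of the tool call, and here it is a scripted fake so the example runs offline. validate_stock_args, get_valid_args, the corrective message wording, and max_attempts are all hypothetical and meant to be adapted.

```python
import json
import logging
from typing import Callable

logging.basicConfig(level=logging.INFO)
log = logging.getLogger("tools")


def validate_stock_args(args: dict) -> None:
    """Hypothetical business rule for get_stock_price: 1 to 5 uppercase letters."""
    symbol = args.get("ticker_symbol", "")
    if not (symbol.isalpha() and symbol.isupper() and 1 <= len(symbol) <= 5):
        raise ValueError(f"ticker_symbol looks invalid: {symbol!r}")


def get_valid_args(
    call_model: Callable[[list[dict]], str],
    messages: list[dict],
    max_attempts: int = 3,
) -> dict:
    """Ask the model for tool arguments, validating and retrying on failure."""
    messages = list(messages)  # don't mutate the caller's list
    for attempt in range(1, max_attempts + 1):
        raw = call_model(messages)
        log.info("attempt %d tool arguments: %s", attempt, raw)
        try:
            args = json.loads(raw)
            validate_stock_args(args)
            return args
        except ValueError as exc:  # json.JSONDecodeError is a ValueError subclass
            log.warning("validation failed: %s", exc)
            # Short corrective system message before the next attempt.
            messages.append({
                "role": "system",
                "content": f"Your last tool call was invalid ({exc}). Call the tool again with corrected arguments.",
            })
    raise RuntimeError(f"no valid tool arguments after {max_attempts} attempts")


# Scripted fake model: first returns a company name, then a proper ticker.
scripted = iter(['{"ticker_symbol": "Apple"}', '{"ticker_symbol": "AAPL"}'])
args = get_valid_args(lambda msgs: next(scripted), [{"role": "user", "content": "Price of Apple?"}])
print(args)
```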

5. Chain tools when needed

For more complex workflows (e.g., fetch user → fetch orders → summarize), you can:

- Let the model call multiple tools in one response (parallel tool calls), or

- Orchestrate step-by-step from your backend, feeding previous tool results back into the model.
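Backend orchestration usually comes down to a loop: send the conversation, execute every tool call the model returns (there may be several in one response), append the results, and repeat until the model answers in plain text. This is a minimal offline sketch using Chat Completions-shaped dicts; fake_model is a scripted stand-in for the real API call, and get_user_profile and get_user_orders are hypothetical tools returning placeholder data.

```python
import json
from typing import Callable


def get_user_profile(user_id: str) -> dict:
    return {"user_id": user_id, "name": "Ada"}  # placeholder data


def get_user_orders(user_id: str) -> list:
    return [{"order_id": "o-1", "status": "shipped"}]  # placeholder data


TOOLS: dict[str, Callable] = {
    "get_user_profile": get_user_profile,
    "get_user_orders": get_user_orders,
}


def run_conversation(model: Callable[[list], dict], messages: list, max_turns: int = 5) -> str:
    """Loop until the model replies without tool calls, then return its text."""
    messages = list(messages)
    for _ in range(max_turns):
        reply = model(messages)
        messages.append(reply)
        tool_calls = reply.get("tool_calls") or []
        if not tool_calls:
            return reply["content"]
        # Answer every call, including parallel ones, with a matching tool message.
        for call in tool_calls:
            fn = TOOLS[call["function"]["name"]]
            result = fn(**json.loads(call["function"]["arguments"]))
            messages.append({"role": "tool", "tool_call_id": call["id"], "content": json.dumps(result)})
    raise RuntimeError("too many tool-calling turns")


def fake_model(messages: list) -> dict:
    """Scripted stand-in: first requests two tools in parallel, then summarizes."""
    if messages[-1]["role"] == "user":
        return {"role": "assistant", "content": None, "tool_calls": [
            {"id": "c1", "type": "function",
             "function": {"name": "get_user_profile", "arguments": '{"user_id": "u42"}'}},
            {"id": "c2", "type": "function",
             "function": {"name": "get_user_orders", "arguments": '{"user_id": "u42"}'}},
        ]}
    tool_msgs = [m for m in messages if m["role"] == "tool"]
    return {"role": "assistant", "content": f"Received {len(tool_msgs)} tool results."}


print(run_conversation(fake_model, [{"role": "user", "content": "Summarize my orders."}]))
```

The max_turns cap is a deliberate safety valve: without it, a model that keeps requesting tools would loop forever.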

A Concrete Multi-Step Example: Math with Mixed Formats

Let’s revisit a classic example that shows why function calling is more than a gimmick.

“What’s the result of 22 plus 5 in decimal added to the hexadecimal number A?”

We can solve this by defining two tools:

- add_decimal(a: number, b: number)

- add_hex(a: string, b: string)

The workflow:

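- The model calls add_decimal with arguments { "a": 22, "b": 5 }, and your code returns 27.

- You send the result and the original question back to the model.

- The model converts 27 to hexadecimal (1B) and calls add_hex with arguments { "a": "1B", "b": "A" }. Passing "27" here would be a mistake, because add_hex would read it as hexadecimal 0x27 (39 in decimal).

- Your code returns 25 (hexadecimal, which is 37 in decimal), and the model explains the final result to the user.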
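The two tools and the chain of calls can be checked directly in Python. This is a minimal sketch: add_decimal and add_hex are hypothetical implementations matching the signatures above, using integers for simplicity and passing hexadecimal values as strings without a 0x prefix.

```python
def add_decimal(a: int, b: int) -> int:
    """Add two decimal integers."""
    return a + b


def add_hex(a: str, b: str) -> str:
    """Add two hexadecimal strings (e.g. "1B", "A") and return uppercase hex."""
    return format(int(a, 16) + int(b, 16), "X")


step1 = add_decimal(22, 5)      # 27
step1_hex = format(step1, "X")  # 27 in hexadecimal is "1B"
step2 = add_hex(step1_hex, "A")
print(step1, step1_hex, step2, int(step2, 16))

# The pitfall: feeding the decimal result "27" straight into add_hex
# treats it as 0x27 and produces a different (wrong) answer.
print(add_hex("27", "A"))
```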

This pattern generalizes to any domain: finance, analytics, data viz, DevOps, BI dashboards, etc. Function calling + your own tools = a flexible, domain-aware AI assistant.

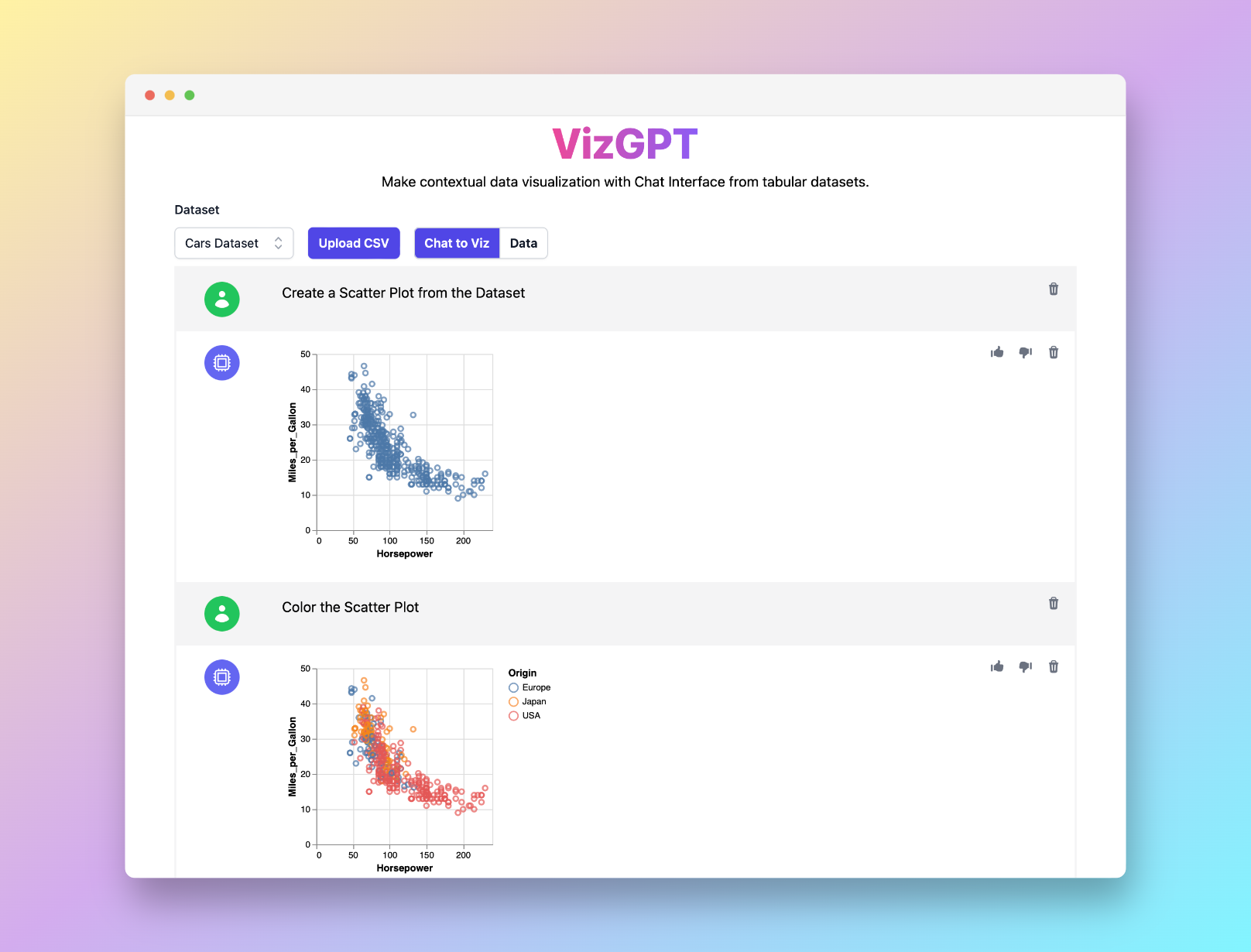

Want to Generate Any Type of Charts Easily with the Power of ChatGPT? Try VizGPT: describe your data and chart in natural language and get beautiful charts with no code.

Related OpenAI Updates (High-Level)

OpenAI has continued to improve the function calling and tooling experience with:

- Larger context windows on modern models, making it easier to work with long conversations, documents, or schemas.

- Structured Outputs for tools and response formats, ensuring model output adheres to your JSON Schema.

- A richer Responses API and tools ecosystem (web search, file search, code execution, and more), making it easier to build full agentic workflows with the same function-calling concepts.

For pricing and the latest model list, always check the official OpenAI documentation and pricing page.

Wrapping Up

Function calling is one of the most powerful ways to turn LLMs into practical, reliable pieces of your stack:

- You describe what your functions can do, in JSON Schema.

- The model decides when and how to call them.

- Your backend executes the calls and, together with the model, builds rich experiences for your users.

Whether you’re scheduling meetings, querying stock prices, booking travel, or powering a full BI dashboard with tools like PyGWalker and VizGPT, function calling is the glue between natural language and real actions.

Start simple with a single function, validate everything, then grow into full multi-step agentic workflows as your application matures.

Frequently Asked Questions


What is OpenAI’s function calling feature?

Function calling lets you describe functions (tools) with JSON Schema so the model can return a structured function call instead of plain text. The model doesn’t execute anything itself; your application parses the tool call and runs the real function.


Which models support function calling?

Modern GPT-4, GPT-4.1, GPT-4o, GPT-4o-mini, and newer GPT-5/o-series models support tools/function calling through the Chat Completions and Responses APIs. Check the OpenAI docs for the latest list of supported models.

-

Should I use the old

functions/function_callparameters?No. Those were the first generation of function-calling parameters and are now considered legacy. New code should use

toolsandtool_choice, which are more flexible and work across newer models and APIs. -

How is this different from JSON Mode or Structured Outputs?

- JSON Mode (response_format: { "type": "json_object" }) ensures the model returns valid JSON, but doesn’t enforce your schema.

- Function calling with Structured Outputs (strict: true in the tool definition) ensures the arguments match the JSON Schema you provided.

- If you need schema-conforming output without a tool call, Structured Outputs is also available as a response format (response_format: { "type": "json_schema", ... } in Chat Completions).

What are some common use cases?

- Chatbots that call external APIs (weather, CRM, ticketing, internal tools)

- Natural language → API calls, SQL queries, search filters

- Data extraction pipelines that turn unstructured text into structured records

- Multi-step “agent” workflows for automation, analytics, and BI