Databricks vs Snowflake: A Comprehensive Comparison for Data Analysts and Data Scientists

As data grows in volume and complexity, data analysts and data scientists need tools suited to the job. This comparison looks at two of the most popular data platforms, Databricks and Snowflake, and examines their features, benefits, and drawbacks to help you decide which one fits your needs.

Overview

Databricks is a cloud-based platform that provides a unified analytics workspace for big data processing, machine learning, and AI applications. It is built on top of the popular Apache Spark framework, enabling users to scale their data processing and analysis tasks efficiently.

Snowflake, on the other hand, is a cloud-based data warehouse solution that focuses on storage, management, and analytics of structured and semi-structured data. It is designed around massively parallel processing (MPP), which allows for fast querying and analysis of data.

Key Features

Databricks

- Unified Analytics Platform: Databricks combines data engineering, data science, and AI capabilities in one platform, enabling collaboration between different teams and roles.

- Apache Spark: As a Spark-based platform, Databricks offers high performance and scalability for big data processing and machine learning workloads.

- Interactive Workspace: Databricks provides an interactive notebook workspace with support for various languages, including Python, R, Scala, and SQL. Its notebooks are interoperable with Jupyter Notebook: .ipynb files can be imported and exported.

- MLflow: Databricks includes MLflow, an open-source platform for managing the end-to-end machine learning lifecycle, simplifying model development and deployment.

- Delta Lake: Delta Lake is an open-source storage layer that brings ACID transactions and other data reliability features to your data lake, improving data quality and consistency.

Snowflake

- Cloud Data Warehouse: Snowflake's primary focus is on providing a scalable and easy-to-use cloud-based data warehouse solution.

- Unique Architecture: Snowflake's architecture separates storage, compute, and cloud services, allowing for independent scaling and cost optimization.

- Support for Structured and Semi-Structured Data: Snowflake can handle both structured and semi-structured data, such as JSON, Avro, Parquet, and XML.

- Data Sharing and Integration: Snowflake offers native data sharing capabilities, simplifying data collaboration between organizations. It also provides a wide range of data integration tools to streamline data ingestion and processing.

- Security and Compliance: Snowflake places a strong emphasis on security and compliance, with features such as encryption, role-based access control, and support for various compliance standards.
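Snowflake's support for semi-structured data, listed above, usually means querying a VARIANT column with path notation. The snippet below is a minimal sketch under stated assumptions: the table `raw_events`, the column `payload`, the JSON field names, and the helper `build_variant_query` are all hypothetical names chosen for illustration. It only builds the SQL text, using Snowflake's `column:path` traversal and `::type` cast notation. Running the query would require a real Snowflake connection, which is not shown.

```python
from typing import List, Tuple


def build_variant_query(table: str, column: str,
                        fields: List[Tuple[str, str, str]]) -> str:
    """Build a SELECT that extracts typed fields from a VARIANT column.

    Each entry in `fields` is (json_path, sql_type, alias).
    Illustration only: the names are interpolated directly into the SQL
    text, so do not pass untrusted input to this helper.
    """
    select_items = [
        f"{column}:{path}::{sql_type} AS {alias}"
        for path, sql_type, alias in fields
    ]
    return f"SELECT {', '.join(select_items)} FROM {table}"


if __name__ == "__main__":
    # Hypothetical table, column, and JSON fields.
    query = build_variant_query(
        "raw_events",
        "payload",
        [
            ("customer.name", "string", "customer_name"),
            ("purchase.total", "number", "purchase_total"),
        ],
    )
    print(query)
```

The point of the sketch is that nested JSON fields are addressed directly in SQL, without first flattening the documents into relational columns.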

Comparing Performance, Scalability, and Cost

Performance

Databricks, being built on Apache Spark, is optimized for high-performance data processing and machine learning tasks. In comparison, Snowflake's focus on data warehousing translates to fast query execution and analytics. However, when it comes to machine learning and AI workloads, Databricks has a clear advantage.

Scalability

Both Databricks and Snowflake are designed to scale with your data needs. Databricks leverages Spark's capabilities to handle big data processing, while Snowflake's unique architecture enables independent scaling of storage and compute resources. This flexibility allows organizations to tailor their infrastructure based on their specific requirements and budget constraints.

Cost

Databricks and Snowflake both offer pay-as-you-go pricing, so you pay only for the resources you consume. Their pricing structures differ in some key aspects, however. Databricks bills compute in Databricks Units (DBUs), while the underlying virtual machine instances, data storage, and data transfer are typically charged by your cloud provider. Snowflake bills for the volume of stored data, the credits consumed by running compute clusters (known as "virtual warehouses"), and, in some cases, data transfer.

Evaluate your organization's data processing and storage needs carefully to determine which platform is more cost-effective for you. Cost optimization often depends on efficient resource management and on features such as auto-scaling and the automatic shutdown of idle compute (auto-suspend in Snowflake, auto-termination in Databricks).
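To make the cost comparison concrete, here is a rough back-of-the-envelope sketch in Python. Every price in it is a hypothetical placeholder, not a published rate, and the function names are invented for illustration. The only platform fact it relies on is that Snowflake's standard warehouse sizes double in credits per hour from one size to the next, starting at 1 credit per hour for X-Small. It ignores data transfer, per-second billing minimums, and edition differences.

```python
# Credits per hour for standard Snowflake warehouse sizes
# (each size doubles the previous one).
CREDITS_PER_HOUR = {"XS": 1, "S": 2, "M": 4, "L": 8, "XL": 16}


def snowflake_monthly_cost(size, active_hours_per_day, price_per_credit,
                           stored_tb, price_per_tb_month, days=30):
    """Estimate monthly cost as warehouse compute plus storage."""
    compute = CREDITS_PER_HOUR[size] * active_hours_per_day * days * price_per_credit
    storage = stored_tb * price_per_tb_month
    return compute + storage


def databricks_monthly_cost(dbu_per_hour, active_hours_per_day, price_per_dbu,
                            vm_price_per_hour, days=30):
    """Estimate monthly cost as DBU charges plus cloud VM charges."""
    hours = active_hours_per_day * days
    return hours * (dbu_per_hour * price_per_dbu + vm_price_per_hour)


if __name__ == "__main__":
    # All rates below are assumed placeholders, not real prices.
    with_suspend = snowflake_monthly_cost("M", 6, 3.0, 2, 23.0)
    always_on = snowflake_monthly_cost("M", 24, 3.0, 2, 23.0)
    cluster = databricks_monthly_cost(4, 6, 0.5, 2.0)
    print(f"Snowflake, 6 active h/day: {with_suspend:.2f}")
    print(f"Snowflake, always on:      {always_on:.2f}")
    print(f"Databricks, 6 active h/day: {cluster:.2f}")
```

With these placeholder numbers, a warehouse that is suspended outside six active hours a day costs roughly a quarter of one left running around the clock, which is why idle-compute settings matter as much as list prices.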

Integration and Ecosystem

Both Databricks and Snowflake offer extensive integration options with popular data sources, tools, and platforms.

- Databricks integrates seamlessly with big data processing tools like Hadoop, as well as data storage services such as Amazon S3, Azure Blob Storage, and Google Cloud Storage. Additionally, it supports popular data visualization tools like Tableau and Power BI.

- Snowflake, being a data warehouse solution, offers numerous connectors and integration options for data ingestion and ETL processes, including popular tools like Fivetran, Matillion, and Talend. It also supports integration with business intelligence platforms like Looker, Tableau, and Power BI.

When it comes to the overall ecosystem, Databricks has a strong focus on the Apache Spark community, while Snowflake is more geared towards the data warehousing and analytics space. Depending on your organization's specific needs, one platform may provide better support and resources for your use case.

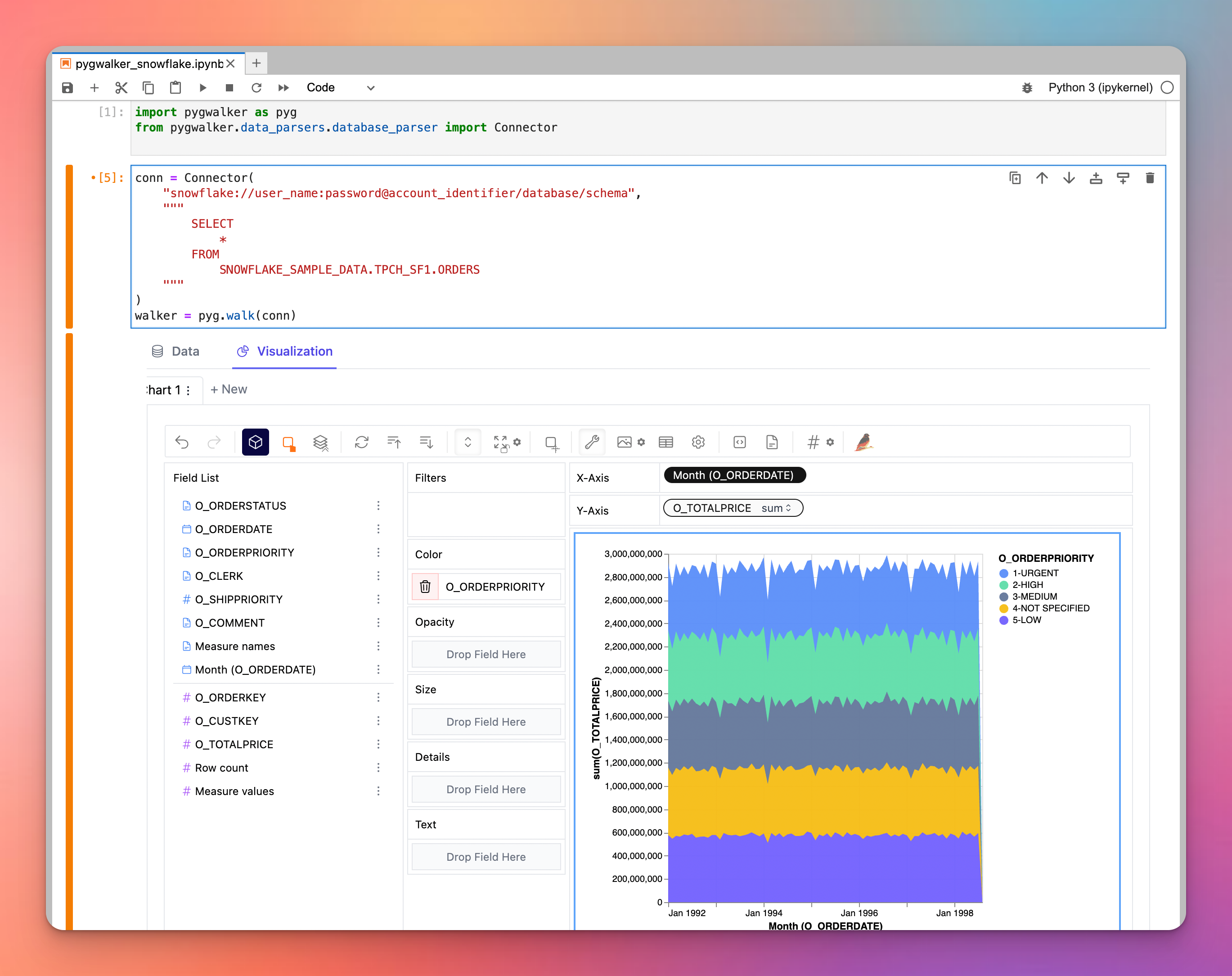

By the way, how about visually exploring your data in Snowflake or Databricks with PyGWalker?

If you're looking for a capable visualization tool, consider PyGWalker, a Python library that turns dataframes into a Tableau-style visualization application. Notably, PyGWalker can delegate its queries to external engines such as Snowflake, so you can use Snowflake's compute power while building visualizations interactively. PyGWalker is now available on Kanaries, with a 50% discount on the first month of your subscription. Check PyGWalker's homepage for more details.

Conclusion

Databricks and Snowflake are both powerful platforms designed to address different aspects of data processing and analytics. Databricks shines in big data processing, machine learning, and AI workloads, while Snowflake excels in data warehousing, storage, and analytics. To make the best choice for your organization, it's crucial to consider your specific requirements, budget, and integration needs.