Unveiling ChatGPT-4O: A Quantum Leap in Conversational AI

OpenAI has once again pushed the boundaries of what's possible in the realm of artificial intelligence with the launch of ChatGPT-4O. This latest iteration of the AI model introduces groundbreaking features that promise to revolutionize how we interact with technology. Let's dive into the exciting updates and explore how they can benefit us and inspire innovative applications.

1. Real-Time Voice Communication

One of the most significant advancements in ChatGPT-4O is its ability to engage in real-time voice communication. Previous versions paused noticeably while processing speech; ChatGPT-4O responds in near real time, with OpenAI reporting average audio response times of about 320 milliseconds. This improvement makes conversations with AI feel more natural and fluid, enhancing the user experience.

Benefits and Applications:

- Enhanced Customer Service: Businesses can implement real-time voice assistants to provide instant support, reducing wait times and improving customer satisfaction.

- Interactive Learning: Educational platforms can offer real-time tutoring sessions, making learning more engaging and responsive to students' needs.

- Hands-Free Assistance: Real-time voice communication allows for more effective hands-free operation in various contexts, such as driving or performing complex tasks in professional environments.

2. Emotional Nuance in AI Voice

ChatGPT-4O's voice now carries more emotional depth, making interactions more empathetic and human-like. This development is crucial for creating more meaningful and effective communication with AI.

Benefits and Applications:

- Mental Health Support: AI-driven mental health apps can provide more empathetic responses, offering better emotional support and connection.

- Entertainment and Storytelling: AI can bring characters to life in audiobooks, games, and interactive stories with more expressive and engaging voices.

- Personal Assistants: Virtual assistants can offer more personalized and emotionally attuned responses, improving user satisfaction and interaction quality.

3. Real-Time Vision Capabilities

The new real-time vision capabilities of ChatGPT-4O enable it to see and understand visual inputs and to respond by voice, with vision and speech handled end to end by a single model.

Benefits and Applications:

- Augmented Reality (AR): Enhancing AR experiences with real-time visual and verbal feedback, making applications more interactive and informative.

- Healthcare: Real-time visual analysis can assist in medical diagnostics, where AI can provide instant insights based on visual data, such as X-rays or MRI scans.

- Accessibility: Helping visually impaired individuals by describing their surroundings and reading text or signs in real time.

4. Code Reading Through Vision

ChatGPT-4O can read and understand code through visual inputs, eliminating the need for OCR (Optical Character Recognition) models. This feature streamlines the process of working with code, whether it's handwritten or displayed on a screen.

Benefits and Applications:

- Software Development: Developers can quickly debug and analyze code by showing it to the AI, speeding up the development process.

- Education: Coding bootcamps and tutorials can leverage this capability to provide instant feedback on students' handwritten code.

- Documentation: Easier and faster interpretation of code snippets from textbooks or screenshots, aiding learning and reference.

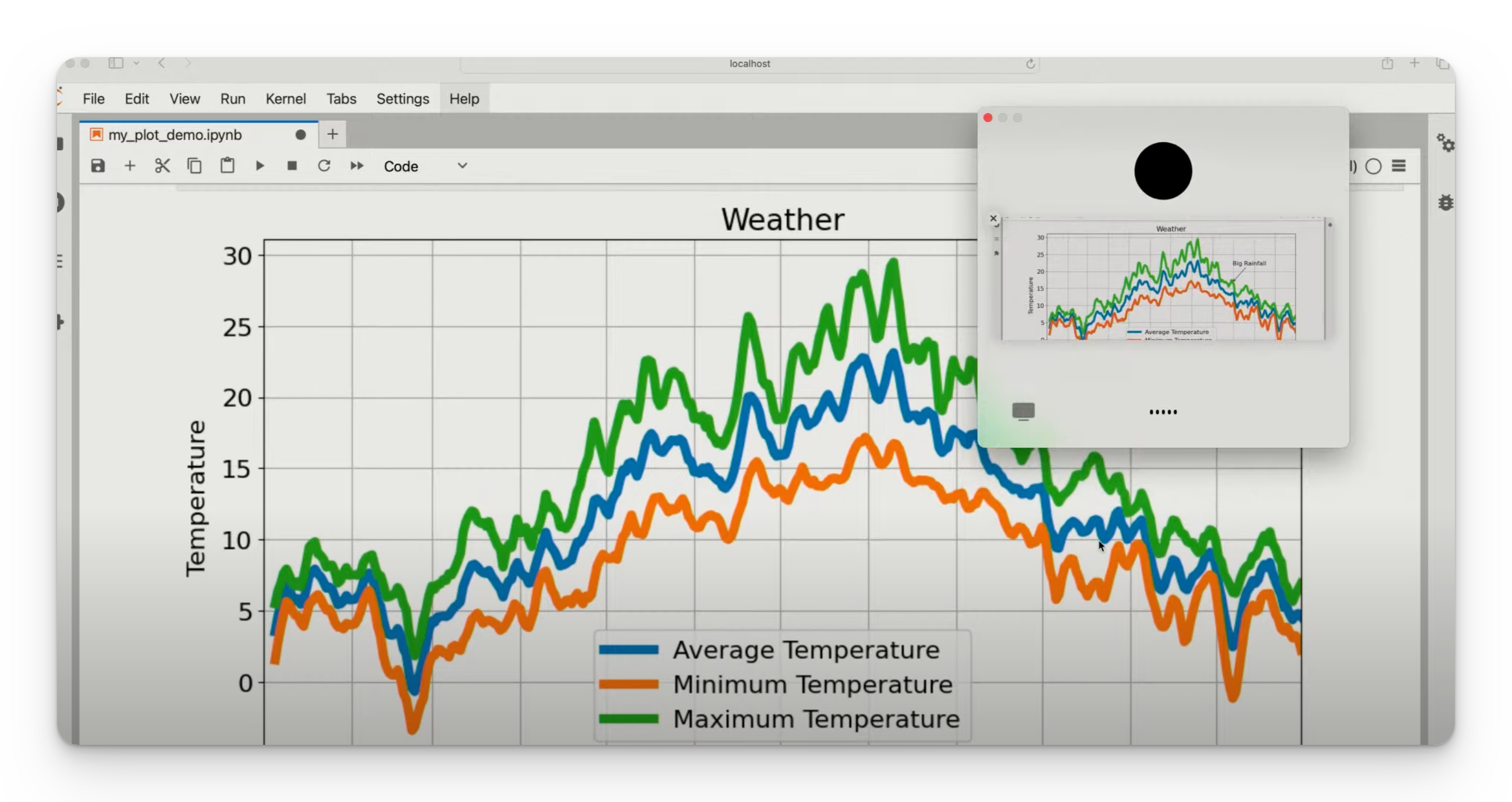
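To make the idea of showing code to the model concrete, here is a minimal sketch of how a screenshot could be packaged for the API. It only builds the request body and does not send anything. The message shape follows the image input format of OpenAI's Chat Completions API; the function name `build_code_review_request`, the prompt wording, and the placeholder image bytes are assumptions made for illustration.

```python
import base64
import json


def build_code_review_request(image_bytes: bytes, mime_type: str = "image/png") -> dict:
    """Build a Chat Completions request body asking the model to read code in an image.

    Hypothetical helper: the name and prompt text are illustrative, not from OpenAI.
    """
    # Images can be passed inline as a base64-encoded data URL.
    encoded = base64.b64encode(image_bytes).decode("ascii")
    data_url = f"data:{mime_type};base64,{encoded}"
    return {
        "model": "gpt-4o",
        "messages": [
            {
                "role": "user",
                "content": [
                    {
                        "type": "text",
                        "text": "Transcribe the code in this image and point out any bugs.",
                    },
                    {"type": "image_url", "image_url": {"url": data_url}},
                ],
            }
        ],
    }


if __name__ == "__main__":
    # Placeholder bytes stand in for a real screenshot read from disk.
    request = build_code_review_request(b"not a real image")
    print(json.dumps(request, indent=2)[:300])
```

The resulting dictionary could then be posted to the Chat Completions endpoint or passed to an SDK client; that step is left out here because it needs an API key and network access.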

5. Data and Chart Reading

With its enhanced vision capabilities, ChatGPT-4O can read and interpret charts and data visualizations. This ability transforms how we interact with data, making it more accessible and actionable.

Benefits and Applications:

- Business Intelligence: Real-time analysis of charts and data can provide instant insights during meetings, aiding decision-making processes.

- Education: Teachers can use AI to help students understand complex data visualizations, making learning more interactive and effective.

- Research: Researchers can quickly interpret data from charts and graphs, streamlining the analysis process and improving productivity.

Want to see how this feature can influence your data analysis? Check out Kanaries AI Analytic to try a GPT-4o-powered agent for Data Visualization now.

6. Improved Translation Abilities

ChatGPT-4O boasts significantly improved translation capabilities, making cross-language communication smoother and more accurate.

Benefits and Applications:

- Global Collaboration: Businesses and teams can communicate more effectively across language barriers, facilitating international collaboration.

- Travel and Tourism: Tourists can navigate foreign countries with ease, thanks to accurate and real-time translation of signs, menus, and conversations.

- Education: Language learning apps can provide more accurate translations and context, enhancing the learning experience for students.

GPT-4O API

OpenAI has also released the GPT-4o API. Here is what has changed in GPT-4o compared with GPT-4 Turbo.

| Feature | Description |
|---|---|
| High intelligence | GPT-4 Turbo-level performance on text, reasoning, and coding intelligence, setting new high watermarks on multilingual, audio, and vision capabilities. |
| 2x faster | GPT-4o is 2x faster at generating tokens than GPT-4 Turbo. |
| 50% cheaper pricing | GPT-4o is 50% cheaper than GPT-4 Turbo, costing $5 per million input tokens and $15 per million output tokens. |
| 5x higher rate limits | GPT-4o has 5x the rate limits of GPT-4 Turbo, up to 10 million tokens per minute. Rate limits will ramp up to this level for high usage developers in the coming weeks. |
| Improved vision | GPT-4o has enhanced vision capabilities across the majority of tasks. |
| Improved non-English language capabilities | GPT-4o uses a new tokenizer for more efficient non-English text tokenization and has improved capabilities in non-English languages. |
| Context window and knowledge cut-off | GPT-4o has a 128K context window and a knowledge cut-off date of October 2023. |
| Video understanding in API | GPT-4o supports understanding video (without audio) via vision capabilities by converting videos to frames (2-4 frames per second) for input. |
| Audio support in API | GPT-4o in the API does not yet support audio but aims to bring this modality to trusted testers in the coming weeks. |
| Image generation support in API | GPT-4o in the API does not support generating images. DALL-E 3 API is recommended for this purpose. |
| Recommendation for users | Users of GPT-4 or GPT-4 Turbo are recommended to evaluate switching to GPT-4o. API documentation and Playground support for vision and comparing output across models are available. |

This table summarizes the key features and improvements of GPT-4o, highlighting its enhanced performance, cost-effectiveness, and capabilities in vision and multilingual support.
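Two rows of the table reduce to simple arithmetic: the per-token pricing and the 2-4 frames per second used for video input. The sketch below works through both. The prices come from the table; the function names `estimate_cost` and `sample_frame_indices`, and the even-spacing strategy for picking frames, are assumptions for illustration and not a description of how OpenAI's tooling selects frames.

```python
# Prices in US dollars per one million tokens, as listed in the table.
GPT_4O_INPUT_PER_M = 5.00
GPT_4O_OUTPUT_PER_M = 15.00


def estimate_cost(
    input_tokens: int,
    output_tokens: int,
    input_per_m: float = GPT_4O_INPUT_PER_M,
    output_per_m: float = GPT_4O_OUTPUT_PER_M,
) -> float:
    """Return the estimated request cost in US dollars."""
    return (input_tokens * input_per_m + output_tokens * output_per_m) / 1_000_000


def sample_frame_indices(
    source_fps: float, duration_s: float, target_fps: float = 2.0
) -> list[int]:
    """Pick evenly spaced frame indices so a clip is sampled at target_fps.

    Hypothetical helper: even spacing is one simple strategy among many.
    """
    if source_fps <= 0 or target_fps <= 0 or duration_s < 0:
        raise ValueError("fps values must be positive and duration non-negative")
    # Sampling faster than the source frame rate would only repeat frames.
    target_fps = min(target_fps, source_fps)
    total_frames = int(source_fps * duration_s)
    step = source_fps / target_fps
    count = int(duration_s * target_fps)
    return [min(int(i * step), total_frames - 1) for i in range(count)]


if __name__ == "__main__":
    # 200,000 input tokens and 50,000 output tokens.
    print(estimate_cost(200_000, 50_000))  # 1.75
    # A 2-second clip recorded at 30 fps, sampled at 2 frames per second.
    print(sample_frame_indices(30.0, 2.0, 2.0))  # [0, 15, 30, 45]
```

Since the table describes GPT-4o as 50% cheaper, doubling the result of `estimate_cost` gives the implied GPT-4 Turbo cost for the same token counts.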

Conclusion

The launch of ChatGPT-4O marks a monumental step forward in the evolution of conversational AI. With real-time voice communication, emotional nuance, real-time vision capabilities, code reading through vision, data and chart interpretation, and improved translation abilities, the potential applications are vast and transformative. As we continue to integrate these advanced AI capabilities into our daily lives, we can expect to see significant improvements in productivity, accessibility, and the overall quality of human-AI interactions. The future is here, and it is more intelligent and interactive than ever before.