How to Effectively Use Pandas Get Dummies Function


Python's Pandas library offers many robust, versatile functions for data manipulation, and get_dummies is one of them. This tutorial aims to help you understand and effectively use this function in your data preprocessing tasks.

Want to quickly create Data Visualizations in Python?

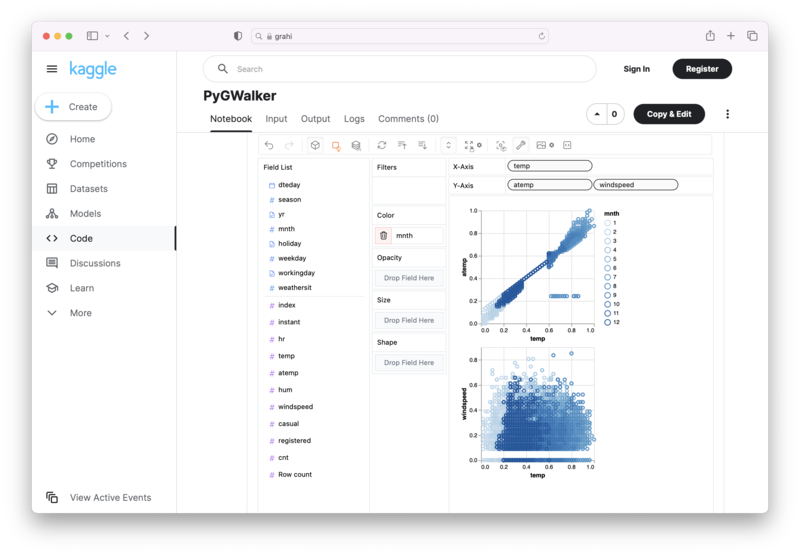

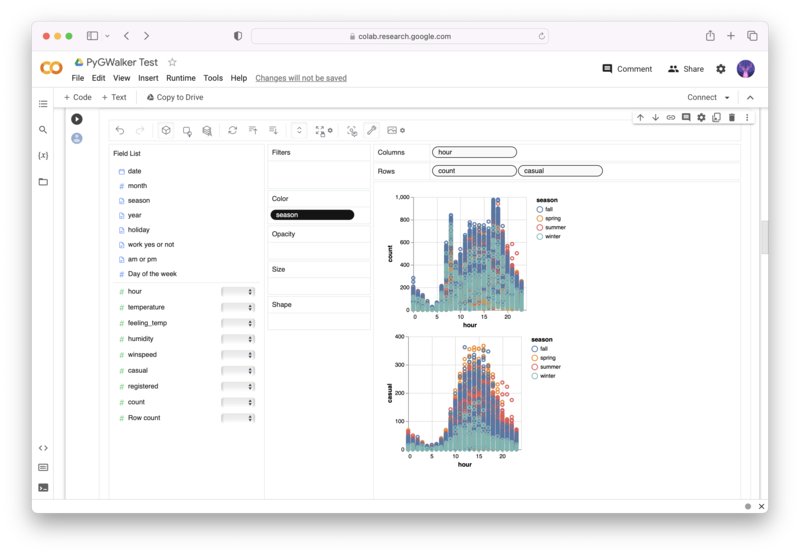

PyGWalker is an open-source Python project that can help speed up the data analysis and visualization workflow directly within Jupyter Notebook-based environments.

PyGWalker turns your Pandas DataFrame (or Polars DataFrame) into a visual UI where you can drag and drop variables to create graphs with ease. Simply use the following code:

```shell
pip install pygwalker
```

```python
import pygwalker as pyg

# df is an existing Pandas (or Polars) DataFrame
gwalker = pyg.walk(df)
```

You can run PyGWalker right now with these online notebooks:

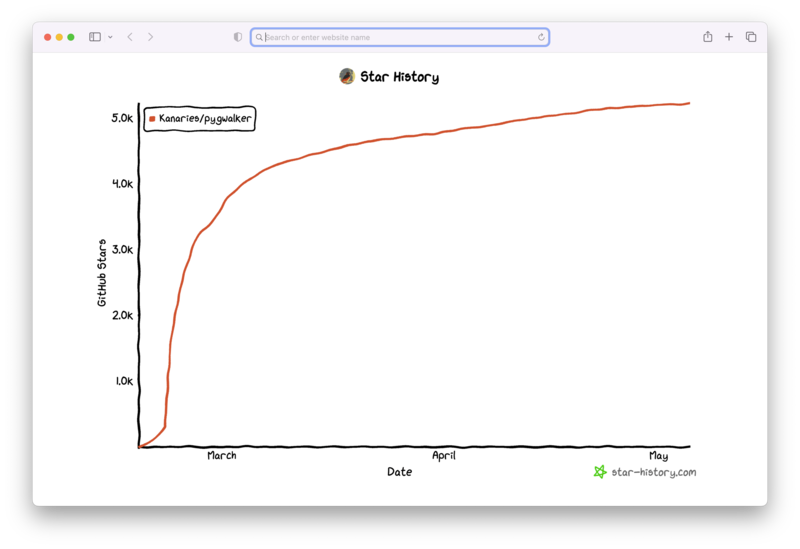

And, don't forget to give us a ⭐️ on GitHub!

Understanding Pandas Get Dummies

Pandas' get_dummies function is a powerful tool for dealing with categorical data. It converts categorical variable(s) into dummy/indicator variables. It creates a new column for each unique category, and each value shows whether that category is present (1 or True) or absent (0 or False) in the original row.
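To make that definition concrete, here is a minimal sketch that builds the indicator columns by hand with plain boolean comparisons and checks that they match pd.get_dummies. It assumes a small illustrative Series of pet names. The variable names manual and automatic are hypothetical. dtype=bool is passed explicitly so the comparison does not depend on the default dtype of your pandas version.

```python
import pandas as pd

# Illustrative data (hypothetical, same values as the tutorial's pets example)
pets = pd.Series(['cat', 'dog', 'bird', 'cat'], name='pets')

# Build the indicator columns by hand: one boolean column per unique category,
# True where the row holds that category. Categories are sorted to mirror
# the column order that get_dummies produces.
manual = pd.DataFrame(
    {f'pets_{category}': pets == category for category in sorted(pets.unique())}
)

# The pandas version of the same transformation, forced to bool for comparison
automatic = pd.get_dummies(pets, prefix='pets', dtype=bool)

print(manual.equals(automatic))  # True
```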

Why does this matter? Machine learning algorithms typically work with numerical data. Hence, categorical data often needs to be transformed into a numerical format, which is where get_dummies comes into play.

```python
import pandas as pd

# Example data
data = pd.DataFrame({'pets': ['cat', 'dog', 'bird', 'cat']})

# Apply get_dummies; dtype=int gives 0/1 columns
# (since pandas 2.0 the default dtype is bool, which prints True/False)
dummies_data = pd.get_dummies(data, dtype=int)

print(dummies_data)
```

This will output:

```
   pets_bird  pets_cat  pets_dog
0          0         1         0
1          0         0         1
2          1         0         0
3          0         1         0
```

The Anatomy of Get Dummies Function

The get_dummies function has several parameters to allow for granular control over its operation. Here's a brief overview of these parameters:

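- data: The input data (array-like, Series, or DataFrame) from which to generate dummy variables.
- prefix: String to prepend to the dummy column names. When encoding a DataFrame, you can also pass a list with one prefix per encoded column, or a dict mapping column names to prefixes. If omitted for a DataFrame, the original column name is used.
- prefix_sep: Separator placed between the prefix and the category value. Default is "_".
- dummy_na: If True, add a column indicating NaNs; if False, NaNs are ignored. Default is False.
- columns: Optional list of column names to encode. If not specified, all columns with object, string, or category dtype are converted.
- sparse: If True, the dummy columns are backed by a SparseArray (pandas no longer has a SparseDataFrame); if False, regular dense columns are returned. Default is False.
- drop_first: If True, return k-1 dummies out of k categorical levels by removing the first level. This avoids perfect multicollinearity (the "dummy variable trap") in models such as linear regression. Default is False.
- dtype: Data type of the new columns. Default is bool (since pandas 2.0; earlier versions used uint8).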
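The following minimal sketch exercises several of these parameters side by side on one small DataFrame. The DataFrame df and its size and price columns are hypothetical, chosen only so that one column is categorical and one is numeric. dtype=int is passed where the 0/1 output matters, so the results do not depend on the default dtype of your pandas version.

```python
import pandas as pd

# Hypothetical data: one categorical column ('size') and one numeric column ('price')
df = pd.DataFrame({'size': ['S', 'M', 'L', 'M'], 'price': [5, 7, 9, 7]})

# dtype=int produces 0/1 columns instead of the default (bool in pandas >= 2.0).
# With columns=None, only object/string/category columns are encoded,
# so 'price' is kept as-is and placed first.
as_int = pd.get_dummies(df, dtype=int)
print(as_int.columns.tolist())  # ['price', 'size_L', 'size_M', 'size_S']

# prefix_sep changes the separator between the prefix and the category value
custom_sep = pd.get_dummies(df, columns=['size'], prefix_sep='=', dtype=int)
print(custom_sep.columns.tolist())  # ['price', 'size=L', 'size=M', 'size=S']

# drop_first removes the first (sorted) level, here 'L', leaving k-1 columns
reduced = pd.get_dummies(df, columns=['size'], drop_first=True, dtype=int)
print(reduced.columns.tolist())  # ['price', 'size_M', 'size_S']

# sparse=True stores the dummy columns with a sparse dtype
sparse = pd.get_dummies(df, columns=['size'], sparse=True)
print(isinstance(sparse['size_L'].dtype, pd.SparseDtype))  # True
```

Dropping the first level is mainly useful for linear models. Tree-based models usually work fine with all k columns.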

Practical Examples of Pandas Get Dummies

Let's delve into more practical examples of using the get_dummies function.

1. Using the prefix parameter

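You can use the prefix parameter to control the prefix of the new dummy column names. When you call get_dummies on a DataFrame without a prefix, it uses the original column name (here 'pets'). Passing a different prefix renames the columns, which can help you identify their source later on.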
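The prefix parameter is not limited to a single string. When several columns are encoded at once, it also accepts a dict keyed by column name or a list with one prefix per encoded column. Here is a minimal sketch, assuming a hypothetical two-column DataFrame named pets_colors:

```python
import pandas as pd

# Hypothetical two-column data, used only for this illustration
pets_colors = pd.DataFrame({'pets': ['cat', 'dog'], 'color': ['black', 'white']})

# A dict maps each column name to its own prefix
by_dict = pd.get_dummies(pets_colors, prefix={'pets': 'animal', 'color': 'col'}, dtype=int)
print(by_dict.columns.tolist())
# ['animal_cat', 'animal_dog', 'col_black', 'col_white']

# A list works too: one prefix per encoded column, in column order
by_list = pd.get_dummies(pets_colors, prefix=['animal', 'col'], dtype=int)
print(by_list.columns.tolist())
# ['animal_cat', 'animal_dog', 'col_black', 'col_white']
```

The dict form is usually safer, because it does not depend on the order in which the columns appear.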

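```python
# Apply get_dummies with a custom prefix; without it, the column name
# 'pets' would be used (pets_bird, pets_cat, pets_dog)
dummies_data_prefix = pd.get_dummies(data, prefix='animal')

print(dummies_data_prefix)
```

2. Dealing with NaN values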

When dealing with real-world data, you will often encounter missing values. Using the dummy_na parameter, you can create a separate dummy column for NaN values.
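A quick way to see what dummy_na changes is to encode the same data with and without it. This minimal sketch assumes a small, hypothetical DataFrame named data_with_nan that has one missing value, and it passes dtype=int so the output is 0/1. It shows that, by default, a row whose value is missing simply gets 0 in every dummy column.

```python
import pandas as pd

# Hypothetical data with one missing value in 'pets'
data_with_nan = pd.DataFrame({'pets': ['cat', 'dog', 'bird', None]})

without_na = pd.get_dummies(data_with_nan, dtype=int)
with_na = pd.get_dummies(data_with_nan, dummy_na=True, dtype=int)

print(without_na.columns.tolist())  # ['pets_bird', 'pets_cat', 'pets_dog']
print(with_na.columns.tolist())     # ['pets_bird', 'pets_cat', 'pets_dog', 'pets_nan']

# Without dummy_na, the row with the missing value is all zeros;
# with it, the extra pets_nan column flags that row
print(without_na.iloc[3].tolist())  # [0, 0, 0]
print(with_na.iloc[3].tolist())     # [0, 0, 0, 1]
```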

```python
# Example data with a missing value (None is treated as NaN)
data = pd.DataFrame({'pets': ['cat', 'dog', 'bird', None]})

# Apply get_dummies with dummy_na to add a pets_nan column
dummies_data_nan = pd.get_dummies(data, dummy_na=True)

print(dummies_data_nan)
```

3. Working with multiple columns

The get_dummies function can be applied to multiple columns at once. In the example below, we create dummy variables for two categorical columns, 'pets' and 'color', by listing them in the columns parameter. Any column not listed is left unchanged.
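The columns parameter is also how you restrict encoding to a subset of columns. This minimal sketch assumes a hypothetical DataFrame named animals with two categorical columns and a numeric age column. It shows that columns not listed pass through unchanged and appear before the new dummy columns. When columns is omitted, every object, string, or category column is encoded, while numeric columns are kept as-is.

```python
import pandas as pd

# Hypothetical data: two categorical columns plus a numeric 'age' column
animals = pd.DataFrame({
    'pets': ['cat', 'dog', 'bird', 'cat'],
    'color': ['black', 'white', 'black', 'white'],
    'age': [3, 5, 1, 2],
})

# Encode only 'pets'; 'color' and 'age' pass through unchanged
only_pets = pd.get_dummies(animals, columns=['pets'], dtype=int)
print(only_pets.columns.tolist())
# ['color', 'age', 'pets_bird', 'pets_cat', 'pets_dog']

# Without columns, both text columns are encoded and 'age' is kept
all_cats = pd.get_dummies(animals, dtype=int)
print(all_cats.columns.tolist())
# ['age', 'pets_bird', 'pets_cat', 'pets_dog', 'color_black', 'color_white']
```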

```python
# Example data with multiple columns
data = pd.DataFrame({'pets': ['cat', 'dog', 'bird', 'cat'],
                     'color': ['black', 'white', 'black', 'white']})

# Apply get_dummies to multiple columns
dummies_data_multi = pd.get_dummies(data, columns=['pets', 'color'])

print(dummies_data_multi)
```

Conclusion

Mastering the pd.get_dummies() function can strengthen your data preprocessing for machine learning projects. It is a simple, reliable way to handle categorical data, because it converts that data into the numeric format most algorithms expect.