How to Process JSON Data in Polars: A Quick Guide

Do you dream of processing JSON data like a pro? Welcome aboard! Today, we will explore Polars, a supercharged DataFrame library in Python and Rust, with a special emphasis on JSON data handling. With its blazing-fast performance and easy-to-use methods, you'll see why Polars is quickly becoming the go-to choice for data scientists around the globe.

Converting JSON String Column to Dict in Polars

Often, you may encounter a DataFrame where a column contains JSON strings. Let's say you want to filter this DataFrame based on specific keys or values within these JSON strings. The most robust way to handle this would be to convert the JSON strings into a dictionary. However, Polars doesn't operate with generic dictionaries. It instead uses a concept known as 'structs', where each dictionary key is mapped to a struct 'field name', and the corresponding dictionary value becomes the value of this field.

Here's the catch, though: creating a Series of type struct has two main constraints:

- All structs must have the same field names

- The field names must be listed in the same order

But fret not! Polars provides a string expression method, str.json_path_match, which extracts values using JSONPath syntax. With this, you can check whether a key exists and retrieve its value without building a struct first. Here's how you can do it:

    import polars as pl

    # Each row holds one JSON document; note that the keys differ between rows.
    json_list = [
        """{"name": "Maria", "position": "developer", "office": "Seattle"}""",
        """{"name": "Josh", "position": "analyst", "termination_date": "2020-01-01"}""",
        """{"name": "Jorge", "position": "architect", "office": "", "manager_st_dt": "2020-01-01"}""",
    ]

    # Add a 1-based "id" column (older Polars releases call this method with_row_count).
    df = pl.DataFrame({"tags": json_list}).with_row_index("id", 1)

    # Extract individual keys from the JSON strings into their own columns.
    df = df.with_columns([
        pl.col('tags').str.json_path_match(r"$.name").alias('name'),
        pl.col('tags').str.json_path_match(r"$.office").alias('location'),
        pl.col('tags').str.json_path_match(r"$.manager_st_dt").alias('manager start date'),
    ])

In the example above, we've created a DataFrame with a column named 'tags' containing JSON strings. We then use json_path_match to extract specific values and assign them to new columns ('name', 'location', 'manager start date') in our DataFrame.

Note that if a key is not found, json_path_match will return null. We can utilize this fact to filter our DataFrame based on the presence of a certain key.

    df = df.filter(pl.col('tags').str.json_path_match(r"$.manager_st_dt").is_not_null())

In the line of code above, we're filtering our DataFrame to only include rows where the 'manager_st_dt' key is present in the JSON string.

Reading Large JSON Files as a DataFrame in Polars

When working with large JSON files, you may encounter the following error: RuntimeError: BindingsError: "ComputeError(Owned("InvalidEOF"))". There can be several reasons behind this error, but one common cause is that Polars infers the schema from the first 1000 lines of your JSON file and then runs into a different schema further into the file. This usually happens because some entries have additional keys that weren't present in the initial 1000 lines.

To handle such situations, we can instruct Polars to use a specific schema while reading the file, or we can force Polars to scan the entire file to infer the schema. The latter option might take more time, especially for large files, but will prevent the "InvalidEOF" error.

    df = pl.read_json("large_file.json", infer_schema_length=None)

Setting infer_schema_length to None forces Polars to scan the whole file while inferring the schema. This operation might be more time-consuming, but it ensures Polars accurately recognizes the schema of the entire file, eliminating the potential for errors.

With these techniques, you can handle JSON data processing tasks in Polars effectively, from transforming JSON strings to managing large JSON files. Polars truly opens up new possibilities for efficient data processing.

Visualize Your Polars DataFrame with PyGWalker

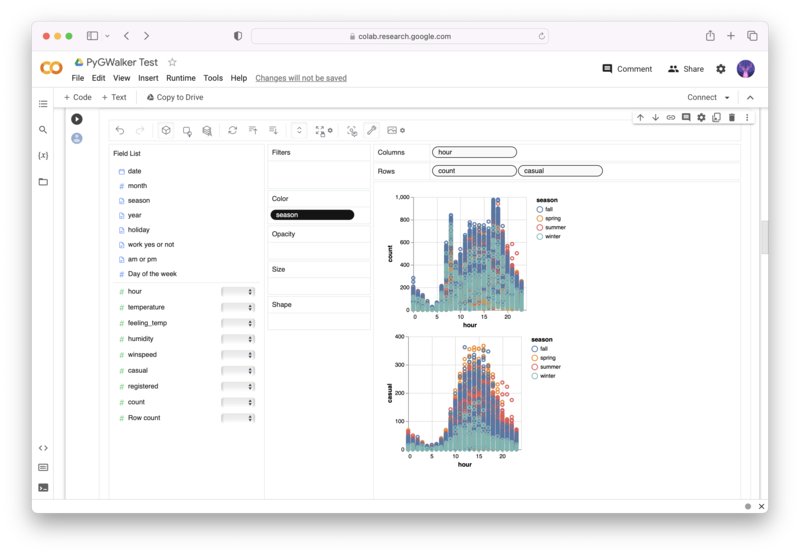

PyGWalker is an open-source Python library that helps you create data visualizations from your Polars DataFrame with ease.

There's no need for complicated processing in Python code anymore: simply import your data, then drag and drop variables to create all kinds of data visualizations. Here's a quick demo video:

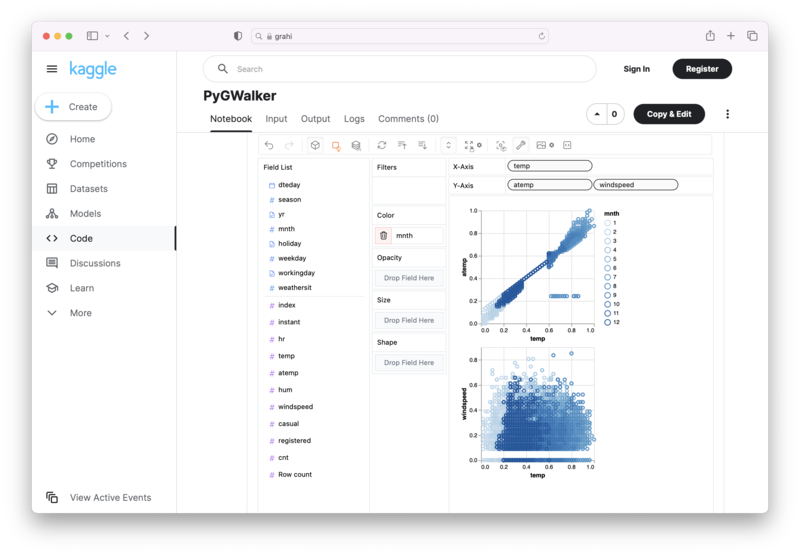

Here's how to use PyGWalker in your Jupyter Notebook:

    pip install pygwalker

    import pygwalker as pyg

    gwalker = pyg.walk(df)

Alternatively, you can try it out in Kaggle Notebook/Google Colab:

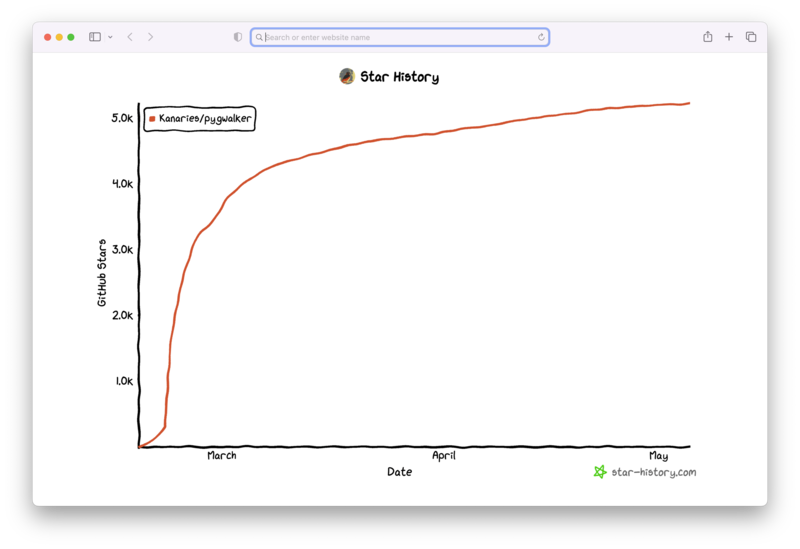

PyGWalker is built with the support of our open-source community. Don't forget to check out the PyGWalker GitHub repository and give us a star!

Frequently Asked Questions

Here are some commonly asked questions related to handling JSON data in Polars:

Q: Why do I need to convert JSON strings to dict in Polars?

A: Polars doesn't work directly with Python dictionaries. Instead, it uses the concept of 'structs'. Converting JSON strings to structs helps you perform operations based on specific keys or values within these JSON strings.

Q: What does the 'infer_schema_length' parameter do in the 'read_json' function?

A: The 'infer_schema_length' parameter defines how many lines Polars should scan from the beginning of your JSON file to infer its schema. If set to None, Polars will scan the whole file, ensuring a comprehensive schema understanding at the expense of longer loading times.

Q: What happens if a key is not found when using the 'json_path_match' function?

A: If the 'json_path_match' function doesn't find a key, it returns null. This behavior can be utilized to filter data based on the presence or absence of specific keys.